| Issue | Acta Acust. Volume 9, 2025 |
|---|---|
| Article Number | 46 |
| Number of page(s) | 30 |
| Section | Inverse Problems in Acoustics |
| DOI | https://doi.org/10.1051/aacus/2025030 |
| Published online | 24 July 2025 |

Scientific Article

Data-driven and physics-constrained acoustic holography based on optimizer unrolling

¹ University of Music and Performing Arts Graz, 8010 Austria

² Institute of Electronic Music and Acoustics, University of Music and Performing Arts Graz, 8010 Austria

* Corresponding author: manuel.pagavino@gmail.com

Received: 4 December 2024 / Accepted: 23 June 2025

Nearfield Acoustic Holography (NAH) retrieves vibro-acoustic patterns of sound sources from non-contact measurements of sound in their proximity. NAH obtains images of structural vibrations to analyze the underlying acoustic phenomena. Holographic problems are typically ill-posed and yield infinitely many solutions. Unique solutions are obtained by optimizing a cost function that targets an approximate solution obeying the laws of physics while simultaneously satisfying constraints that represent prior knowledge characterizing the expected result. The choice of constraints is critical to success, yet it is the most challenging question to answer. Accuracy varies with the quantity and quality of these constraints and requires skillful formulation and tuning. Despite ongoing research on novel constraints and parameter tuning methods, as well as rapid advancements in Deep Learning, the state of the art still exhibits substantial deficiencies. As a solution, this article studies a Variational Network for NAH with the aim of fusing physical knowledge with data-driven modeling. The network retrieves the strengths of equivalent sources from measurements by unrolling an iterative optimizer, whose regularizing parameters are inferred via supervised learning. The proposed method outperforms established solvers in a comparative study using both simulated and real-world data, and it generalizes well to unseen vibration patterns.

Key words: Nearfield Acoustic Holography / Equivalent source method / Data-driven / Physics-constrained / Variational network

© The Author(s), Published by EDP Sciences, 2025

This is an Open Access article distributed under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


1 Introduction

Nearfield Acoustic Holography (NAH) retrieves structural vibration patterns from contactless measurements in the proximity of the vibrating surface using microphone arrays. When NAH emerged in the 1980s [1, 2], the inverse sound propagation problem was first formulated on the basis of the (fast) Fourier transform on flat, rectangular measurement grids to cope with the computational complexity; this, however, implies a periodic spatial repetition and requires the measurement to fully cover the vibration pattern along a flat surface. Another set of methods extended the plane-wave expansions of classical NAH to cylindrical or spherical coordinates, e.g. Statistically Optimized NAH (SONAH) [3] and Helmholtz Equation Least Squares (HELS) [4], which are applied on local measurement patches to compensate for the decreased computational efficiency. The Equivalent Source Method (ESM) [5] was later introduced as a versatile, discrete sound propagation model whose full matrix inversion became feasible as computational resources increased. ESM can handle more arbitrary surface shapes, and the Inverse Boundary Element Method (IBEM) [6–9] was subsequently proposed as a more accurate, quasi-continuous alternative. All of these methods apply numerical regularization to the ill-posed sound propagation terms involved [10], e.g. by Tikhonov functional minimization with an ℓ2-norm penalty [11], and therefore have to give up on resolving components that propagate too weakly, which causes a blurry image of the surface vibration at low frequencies. At high frequencies, spatial aliasing causes interference patterns in the image.

In the past decade, methods based on Compressive Sensing (CS) [12–16] have become increasingly popular, as they help circumvent the inherent spatial blur and, moreover, allow the requirements on spatial sampling to be relaxed. CS requires the surface vibration patterns to be inherently sparse, either directly in space or in a spatial transform domain [17–29]. Approximate sparsity is commonly enforced by ℓ1-norm minimization, which requires non-linear optimization algorithms that trade off data consistency against the sparsity constraint [30]. To guarantee perfect retrieval, the model matrix describing the acquisition process must be sufficiently incoherent [21, 27], which is usually achieved by pseudo-random array geometries [18, 19, 21–29, 31]. Sparsity has been exploited in various domains: directly in the spatial domain [27], in the wavenumber domain (k-space) [21], on modal eigenfunctions [18, 19, 23, 24, 26, 28, 32], and on spatial derivatives [25, 33]. While these methods are quite successful and permit the solution to be adapted to the expected source geometries, it is frequently difficult to choose in advance the particular criterion, the so-called regularizer, that geometrically shapes the solution. Moreover, spatially extended sources may not satisfy any single spatial sparsity condition, and a single common sparsifying transform is often too simplistic. Although CS-based NAH may still be rather successful for sound field retrieval [22], it may also yield non-physical source representations and introduce artifacts, resulting in high reconstruction errors.

In recent years, Deep Learning [34] has emerged as a new paradigm for solving inverse problems in various imaging domains, achieving state-of-the-art results in tasks such as deblurring and deconvolution [35, 36], denoising and inpainting [37], super-resolution [38], and CS [39, 40]; it has only recently been introduced in NAH [41–46]. Rather than relying on handcrafted models, these supervised data-driven approaches exploit deep neural networks that, in theory, can learn to approximate any mapping to arbitrary accuracy without the need to formalize prior knowledge and expectations as cost functions. They learn from generic data describing the problem, which avoids leaving decisions on optimal parameters and solution shapes to the user. However, naive application may lack data consistency and the inclusion of acoustic knowledge, resulting in black-box architectures whose design and training are detached from the known physics of the problem. Ongoing efforts try to improve the explainability of opaque models, e.g. the Gradient-weighted Class Activation Mapping (Grad-CAM) approach [47]. While this approach yields heatmaps revealing the contributions of the most important input features to the model output, it remains challenging to analyze, interpret, and take responsibility for the network's decisions. Regarding the training effort, ignoring established domain knowledge may lead to an overly long training process and require massive datasets for successful and consistent performance.

For the problem at hand, this justifies putting an increased effort into the question of how to incorporate physical properties of the sound field into learning-based methods, with the idea to enforce fundamental acoustic laws while making neural network decisions more explainable and transparent. Recent literature in sound field estimation [48] differentiates between Physics-Constrained (PCNNs) and Physics-Informed Neural Networks (PINNs) as the two main strategies to embed acoustical knowledge in the architecture and training of the neural network.

On the one hand, PCNNs directly predict as their output the coefficients of discrete, finite basis-function expansions, e.g. plane waves of Fourier NAH [49], spherical waves of HELS [50], or equivalent source distributions of the ESM [51], using elementary solutions of the governing Partial Differential Equations (PDEs), i.e. the wave equation. In this way, the learned basis-function expansions strictly satisfy the governing PDEs, even if black-box networks are used to predict their coefficients. PCNNs are usually trained on supervised data pairs, from which the network learns to map the input measurement data to the output coefficients. The incorporation of elementary solutions is expected to improve estimation accuracy during inference.
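To illustrate why expansions in elementary solutions satisfy the governing PDE by construction, the following sketch evaluates the residual of the Helmholtz equation (the frequency-domain wave equation), $|\Delta u + k^2 u|/|k^2 u|$, with a seven-point finite-difference stencil. It compares a superposition of free-space monopoles $e^{-jkr}/(4\pi r)$ with arbitrary complex weights against a static $1/(4\pi r)$ field, which is not a Helmholtz solution. The frequency, source layout, stencil spacing, and the $e^{j\omega t}$ time convention are assumptions made for illustration, and all function names are hypothetical.

```python
import numpy as np

# Illustrative assumptions: 1 kHz in air, e^{j omega t} time convention.
c, f = 343.0, 1000.0
k = 2 * np.pi * f / c  # acoustic wavenumber in rad/m

def dist(points, r_src):
    """Euclidean distance from every point (..., 3) to one source position (3,)."""
    return np.linalg.norm(points - r_src, axis=-1)

def monopole_field(points, sources, q):
    """Superposition of free-space Green's functions e^{-jkr}/(4 pi r) weighted by q."""
    return sum(qn * np.exp(-1j * k * dist(points, rn)) / (4 * np.pi * dist(points, rn))
               for rn, qn in zip(sources, q))

def static_field(points, sources, q):
    """Same layout with 1/(4 pi r) kernels: solves Laplace's, not Helmholtz's, equation."""
    return sum(qn / (4 * np.pi * dist(points, rn)) for rn, qn in zip(sources, q))

def helmholtz_residual(u, h):
    """Relative residual |Lap(u) + k^2 u| / |k^2 u| at the centre of a 3x3x3 stencil."""
    lap = (u[2, 1, 1] + u[0, 1, 1] + u[1, 2, 1] + u[1, 0, 1]
           + u[1, 1, 2] + u[1, 1, 0] - 6 * u[1, 1, 1]) / h**2
    return abs(lap + k**2 * u[1, 1, 1]) / abs(k**2 * u[1, 1, 1])

rng = np.random.default_rng(0)
n_src = 5
sources = np.column_stack([rng.uniform(-0.1, 0.1, n_src),
                           rng.uniform(-0.1, 0.1, n_src),
                           np.zeros(n_src)])           # equivalent sources in the plane z = 0
q = rng.standard_normal(n_src) + 1j * rng.standard_normal(n_src)

h = 2e-4                                              # finite-difference spacing in m
offsets = np.array([-h, 0.0, h])
X, Y, Z = np.meshgrid(offsets, offsets, offsets, indexing="ij")
stencil = np.array([0.02, -0.03, 0.08]) + np.stack([X, Y, Z], axis=-1)  # 8 cm above sources

res_esm = helmholtz_residual(monopole_field(stencil, sources, q), h)
res_static = helmholtz_residual(static_field(stencil, sources, q), h)
print(f"monopole superposition: {res_esm:.1e}, static 1/r field: {res_static:.2f}")
```

Whatever weights are chosen (or predicted by a network), the first residual stays at the level of the finite-difference error, whereas the second is of order one; a PINN, by contrast, only drives such a residual towards zero through a penalty in its loss function.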

On the other hand, PINNs were first introduced to solve forward and inverse problems governed by PDEs using an implicit neural representation [52–54] that parametrizes the sound field (or another quantity) as a continuous function of position, mapping each point $\mathbf r = [x, y, z]^T$ in space to an estimated scalar value $g(\mathbf r) \approx f(\mathbf r)$ that approximates the true field $f$ at the given coordinates. PINNs learn to represent functions continuously, solely by being trained on discrete samples of these functions. They typically incorporate the physical knowledge encoded by the governing PDEs into the loss function that guides the training process. PINNs based on implicit neural representations are usually trained unsupervised, using only the measurement data without ground truth. This makes training feasible when ground-truth data is not accessible, which is common for NAH. In that sense, PINNs are trained to fit the measured data while minimizing the deviation from the governing PDEs, such that the estimated sound field approximately satisfies them. Owing to the implicit neural representation, the PDE constraint can be evaluated at locations other than the measurement points, which allows the governing PDEs to be enforced at high resolution and improves the approximation of the partial derivatives therein. Since their advent, PINNs have been applied in various forms to NAH [55, 56] and to sound field estimation in the time [57, 58] and frequency domains [54, 59–62].

This taxonomy of physics-aware approaches greatly helps the discussion but should not be regarded as a strictly exclusive classification, because some methods meet criteria of both categories. For instance, the Point Neuron Network (PNN) in [54] relies on unsupervised training to learn an implicit neural representation of the sound field and can thus be seen as a PINN. At the same time, the PNN design encodes physical knowledge not in the loss function but in the architecture, with network weights representing the strengths and positions of equivalent sources, so it can also be seen as a PCNN. Note that the taxonomy does not distinguish between black-box networks [41–46, 55, 56] and physics-guided architectural designs [54], which explicitly incorporate physical models into their neural structure.

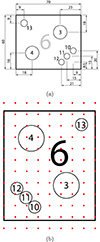

This paper proposes a PCNN with a physics-guided architecture, based on an unrolled optimizer that estimates the NAH image. In particular, a Variational Network (VN) [63–67] is explored as a generative tool for sound source and field retrieval. The proposed VN is built on an unrolled gradient descent scheme with known and learnable parameters that involves generalized data consistency and regularization costs. Unlike typical PCNNs, where only the final network output represents coefficients of the physical basis functions, each VN layer originates from a gradient-descent iteration and returns intermediate coefficients of the equivalent sources as a holographic image. Every VN layer can therefore be regarded as a small PCNN. Similar to [54], the VN architecture directly incorporates the free-space Green's functions as the physical fundamental solutions representing the equivalent sources. In contrast to hidden, latent layers, which dominate many neural approaches to NAH [41–46] but are not amenable to direct interpretation even if physics is considered [55, 56], the proposed VN is transparent by design: each layer along the unrolled VN is not latent but delivers a manifest holographic image. The structural similarity to unrolled iterative solving (see Fig. 1) makes the improvement across the manifest layers visible and provides insight into the VN's decision-making: the image improvements are expected to permit interpretation [63–66, 68, 69] in the sense that regularizing constraints and domain transformations should be recognizable as characteristic improvement patterns across the layers, e.g. relating to sparsity-inducing norms and spatial derivative filters, see also [25, 33]. As the holographic image consists of the strengths of equivalent sources whose radiated sound should not differ much from the input (measurement) data, the VN enforces data consistency in every layer by penalizing deviations.
To take into account the complex-valued nature of NAH data, the proposed VN is designed to perform fully complex-valued operations [56, 70], rather than relying only on magnitude information [41, 42] or treating real and imaginary parts separately by stacking them into a real-valued supervector [55]. Ideally, the trained VN should be able to compensate for missing or corrupted information, to find the optimal regularization, and to operate beyond the spatial aliasing limit. For supervised learning, the idea is to present it with a few datasets representative of a large number of vibration images and acquisition setups, in the hope that the network generalizes well to different vibration patterns, measurement distances, and noise levels. Adapting the physical constraints should help minimize the need for problem-dependent regularization or re-training. Geometrically, it is helpful to permit 2D convolutional filter kernels and transformations, which implies assuming a flat plane for the image/equivalent sources, sampled on a Cartesian $(x, y)$ grid with uniform increments $\Delta x$ and $\Delta y$, parallel to the measurement plane.

Figure 1. Schematic iteration/layer representation of an unrolled gradient descent on a single model mismatch and regularization cost that forms the basis of the Variational Network (VN), showing its structural similarity to a typical residual neural network (ResNet).

The paper is structured as follows: In Section 2, the ESM and its relation to the forward and inverse problems are explained, along with its inherent ill-posedness and the optimization theory needed to mitigate its effects and obtain valid solutions. Section 3 recalls the theory behind ill-posed inversion by constrained model fitting, and Section 3.2 presents established NAH solvers that serve as benchmarks in this study. Building on this foundation, the VN architecture is derived in Section 4.1, and the PNN in [54] is briefly reviewed in Section 4.2, as it is closely related to the proposed method and thus serves as an additional benchmark. Relevant implementation details are presented in Section 5. In Section 6, a simulation study investigates the accuracy, generalization, robustness, and wideband capabilities of the proposed method by comparing it against the established handcrafted and neural solvers. The study uses spatially extended and compact test sources, namely the vibrating plate in Section 6.1, the baffled piston in Section 6.2, and the quadrupole in Section 6.3, which represent the contrasting phenomena of smooth variations, constant in-phase vibration, and sparsity, respectively. Moreover, the iterative nature of the VN allows one to observe how the holographic image evolves across layers/iterations from a crude initial approximation to its final outcome, which is investigated in greater detail in Section 6.4 for the baffled piston example. Section 7 presents an experimental validation to explore the method's performance under real-world conditions; the experimental source is a custom-made box with a dispersed loudspeaker layout.

2 Equivalent Source Method (ESM)

We use the Equivalent Source Method (ESM) [5, 71] to model the sound pressure field $p(\mathbf r)$ of a source, sampled in its immediate nearfield across space $\mathbf r = [x, y, z]^T$ in the frequency domain by a planar microphone array of $M = M_x \cdot M_y$ microphones distributed over the measurement surface $S_M$. According to the ESM, the sound pressure $p(\mathbf r_m) = p_m$ at the $m$-th microphone position $\mathbf r_m = [x_m, y_m, z_m]^T$ can be modeled by superimposing the sound fields radiated by a distribution of $N = N_x \cdot N_y$ elementary sources with the weights $q(\mathbf r_{0,n})$ over the equivalent source surface $S_0$, which may be retracted inwards from the structural surface $S$ to prevent singularity. At the angular frequency $\omega$, a single-layer potential driving monopoles (free-field Green's functions) models the sound pressure on the measurement plane in the discrete-space domain,

$$ p(\mathbf r_m) = j\omega\rho \sum_{n=1}^{N} g(\mathbf r_m, \mathbf r_{0,n})\, q(\mathbf r_{0,n}). \tag{1} $$

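Here, $\rho$ is the density of air, $q(\mathbf r_{0,n}) = q_n$ is the complex source strength of the $n$-th equivalent source at $\mathbf r_{0,n} = [x_{0,n}, y_{0,n}, z_{0,n}]^T$, and

$$ g(\mathbf r_m, \mathbf r_{0,n}) = \frac{e^{-jkr}}{4\pi r} \tag{2} $$

is the free-space Green's function (for an $e^{j\omega t}$ time dependence, with wavenumber $k = \omega/c$ and speed of sound $c$) that relates the radiated sound pressure at $\mathbf r_m$ to the equivalent source at $\mathbf r_{0,n}$, where $r = \|\mathbf r_m - \mathbf r_{0,n}\|_2$ is the Euclidean distance between field and source point. Using matrix notation, the problem results in a simple linear system of equations

$$ \mathbf p = \mathbf G\mathbf q, \tag{3} $$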

where $\mathbf p = [p_1, p_2, \ldots, p_M]^T \in \mathbb C^M$ contains the discrete sound pressure samples $p_m$ of the $M$ microphones, $\mathbf q = [q_1, q_2, \ldots, q_N]^T \in \mathbb C^N$ holds, as the holographic image, the unknown source strengths $q_n$ of the $N$ equivalent sources, and $\mathbf G \in \mathbb C^{M \times N}$ is the propagation matrix, whose entries are the free-field Green's functions in equation (2). The factor $j\omega\rho$ makes $\mathbf q$ express volume velocities as a measure of source strength; for brevity, it is incorporated in $\mathbf G$.
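The forward model in equation (3) can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the helper names `planar_grid` and `propagation_matrix`, the speed of sound $c = 343$ m/s, the air density $\rho = 1.21$ kg/m³, the $e^{j\omega t}$ time convention (hence $e^{-jkr}$ in the kernel), and the grid sizes are all assumptions.

```python
import numpy as np

def planar_grid(nx, ny, dx, dy, z):
    """nx*ny points on a Cartesian grid in the plane at height z, centred on the z-axis."""
    x = (np.arange(nx) - (nx - 1) / 2) * dx
    y = (np.arange(ny) - (ny - 1) / 2) * dy
    X, Y = np.meshgrid(x, y, indexing="ij")
    return np.column_stack([X.ravel(), Y.ravel(), np.full(X.size, z)])

def propagation_matrix(r_mic, r_src, freq, c=343.0, rho=1.21):
    """M x N matrix with entries j*omega*rho * exp(-j*k*r) / (4*pi*r), so that p = G @ q
    maps equivalent-source volume velocities q to microphone pressures p."""
    omega = 2 * np.pi * freq
    k = omega / c
    # Pairwise distances between all M field points and N source points, shape (M, N).
    r = np.linalg.norm(r_mic[:, None, :] - r_src[None, :, :], axis=-1)
    return 1j * omega * rho * np.exp(-1j * k * r) / (4 * np.pi * r)

r_src = planar_grid(16, 16, 0.02, 0.02, z=0.0)   # N = 256 equivalent sources
r_mic = planar_grid(8, 8, 0.04, 0.04, z=0.05)    # M = 64 microphones, 5 cm stand-off
G = propagation_matrix(r_mic, r_src, freq=1000.0)

q = np.zeros(r_src.shape[0], dtype=complex)
q[136] = 1e-4                                     # one active equivalent source
p = G @ q                                          # forward problem, equation (3)
print(G.shape, p.shape)                            # (64, 256) (64,)
```

With 64 microphones and 256 equivalent sources, the system is underdetermined ($M < N$), which is the situation discussed in Section 3.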

The holographic goal is to retrieve the equivalent source strengths $\mathbf q$ from the pressure measurements $\mathbf p$ by inverting equation (3). This inverse problem yields a source estimate $\hat{\mathbf q}$ that can subsequently be used to reconstruct the sound field at any desired point in space outside the source volume via the forward problem in equation (3). Correspondingly, the sound pressure $\mathbf p_R$, the (normal) particle velocity $\mathbf v_{n,R}$, and the intensity $\mathbf I_{n,R}$ reconstructed on the surface $S_R$ with $z_R > z_0$ become

$$ \mathbf p_R = \mathbf G_R\,\hat{\mathbf q}, \tag{4} $$

$$ \mathbf v_{n,R} = -\frac{1}{j\omega\rho}\,\frac{\partial \mathbf G_R}{\partial n}\,\hat{\mathbf q}, \tag{5} $$

$$ \mathbf I_{n,R} = \frac{1}{2}\,\mathrm{Re}\left\{\mathbf p_R \odot \mathbf v_{n,R}^{*}\right\}, \tag{6} $$

where $\mathbf G_R$ is the reconstruction matrix composed of Green's functions evaluated at the reconstruction points $\mathbf r_R$, $\partial/\partial n$ is the normal derivative with respect to $S$ applied to the Green's functions, $\odot$ denotes element-wise multiplication (Hadamard/Schur product), and the raised asterisk $^*$ denotes the complex conjugate. Note that there is no restriction on the number of reconstruction points $\mathbf r_R$, so that it can be chosen to define the desired spatial resolution.
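For a reconstruction plane parallel to the source plane, the normal derivative is $\partial/\partial z$, and for the monopole kernel $g = e^{-jkr}/(4\pi r)$ it has the closed form $\partial g/\partial z = -(1 + jkr)\,(z - z_0)\,g/r^2$. The sketch below builds the pressure and normal-velocity operators from it, assuming an $e^{j\omega t}$ time convention, Euler's equation $v_n = -(j\omega\rho)^{-1}\,\partial p/\partial n$, and the active intensity $\tfrac{1}{2}\mathrm{Re}\{p\,v_n^*\}$; the function names and parameter values are hypothetical.

```python
import numpy as np

def reconstruction_operators(r_rec, r_src, freq, c=343.0, rho=1.21):
    """Return (G_R, V_R) such that p_R = G_R @ q and v_z = V_R @ q on a plane normal to z.
    Assumes e^{j omega t}; V_R follows from Euler's equation v_n = -(1/(j omega rho)) dp/dn."""
    omega = 2 * np.pi * freq
    k = omega / c
    d = r_rec[:, None, :] - r_src[None, :, :]          # (R, N, 3) separation vectors
    r = np.linalg.norm(d, axis=-1)
    g = np.exp(-1j * k * r) / (4 * np.pi * r)          # free-space Green's function
    dg_dz = -(1 + 1j * k * r) * d[..., 2] / r**2 * g   # analytic z-derivative of g
    G_R = 1j * omega * rho * g                         # pressure operator (j*omega*rho absorbed)
    V_R = -dg_dz                                       # -(1/(j omega rho)) * (j omega rho) * dg/dz
    return G_R, V_R

def reconstruct(G_R, V_R, q_hat):
    """Pressure, normal particle velocity, and active normal intensity 0.5*Re{p v*}."""
    p_R = G_R @ q_hat
    v_R = V_R @ q_hat
    I_R = 0.5 * np.real(p_R * np.conj(v_R))
    return p_R, v_R, I_R

# Usage: one monopole at the origin, observed on the z-axis 0.3 m away.
freq, c, rho = 500.0, 343.0, 1.21
r_src = np.array([[0.0, 0.0, 0.0]])
r_rec = np.array([[0.0, 0.0, 0.3]])
q_hat = np.array([1e-3 + 0j])
G_R, V_R = reconstruction_operators(r_rec, r_src, freq, c, rho)
p_R, v_R, I_R = reconstruct(G_R, V_R, q_hat)
print(p_R, v_R, I_R)
```

For a single monopole, the results can be checked against the known on-axis closed forms $p/v_n = j\omega\rho r/(1 + jkr)$ and $I_n = \rho c k^2 |q|^2/(32\pi^2 r^2)$.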

3 Ill-posed inversion by constrained model fitting

In practice, the $M$ array microphones that measure $\mathbf p$ are typically outnumbered by the $N$ equivalent source strengths in $\mathbf q$ to be found. This gives rise to an underdetermined system in equation (3) ($M < N$) with infinitely many solutions, which cannot be solved uniquely. Aliasing further increases the ambiguity of the solution. Moreover, the captured signals may be contaminated by noise and other imperfections. In addition, high-spatial-frequency vibrational information about the source, carried by evanescent waves that decay rapidly with distance, is lost exponentially the farther the measurement array is placed from the source. This spatial smoothing is modeled by the free-field Green's functions in equation (2), and it would cause excessive noise amplification if the propagator $\mathbf G$ were inverted directly, which raises a robustness problem in practice. All these factors contribute to the ill-posedness of the inverse problem in the Hadamard sense [10], making some form of regularization indispensable for accurate reconstruction.
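The loss of evanescent information can be made tangible through the condition number of $\mathbf G$, which grows rapidly as the array moves away from the source plane. The sketch below assumes a $12 \times 12$ grid with 2 cm spacing for both sources and microphones (so $M = N$ here, unlike the underdetermined setting of the text), a frequency of 1 kHz, and stand-off distances of 1, 2, and 4 cm; all values and helper names are illustrative.

```python
import numpy as np

def planar_grid(n, spacing, z):
    """n x n Cartesian grid at height z, centred on the z-axis."""
    x = (np.arange(n) - (n - 1) / 2) * spacing
    X, Y = np.meshgrid(x, x, indexing="ij")
    return np.column_stack([X.ravel(), Y.ravel(), np.full(X.size, z)])

def propagation_matrix(r_mic, r_src, freq, c=343.0, rho=1.21):
    """Monopole propagator with entries j*omega*rho*exp(-j*k*r)/(4*pi*r)."""
    omega = 2 * np.pi * freq
    r = np.linalg.norm(r_mic[:, None, :] - r_src[None, :, :], axis=-1)
    return 1j * omega * rho * np.exp(-1j * omega / c * r) / (4 * np.pi * r)

spacing = 0.02                                   # 2 cm, far below the 34 cm wavelength at 1 kHz
r_src = planar_grid(12, spacing, 0.0)
conds = []
for z in (0.01, 0.02, 0.04):                     # stand-off distances in m
    r_mic = planar_grid(12, spacing, z)          # square 144 x 144 system for simplicity
    G = propagation_matrix(r_mic, r_src, freq=1000.0)
    conds.append(np.linalg.cond(G))              # ratio of largest to smallest singular value
    print(f"z = {z:.2f} m: cond(G) = {conds[-1]:.1e}")
```

Since the relative error in a directly inverted $\mathbf q$ can exceed the relative noise in $\mathbf p$ by up to the condition number, the growth across these three distances illustrates why regularization becomes indispensable.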

The retrieval of $\mathbf q$ can be cast as a constrained optimization task in which the mismatch between the sound field model and the measurements is minimized iteratively. Additional constraints can be imposed to stabilize the problem. Hereby, the infinite set of possible solutions is restricted to one unique and meaningful estimate that best fits the constraints modeling the characteristics of the actual source, while being sufficiently robust not to overfit noisy measurements. One way to achieve this is early stopping [72] of the iterative model fitting according to some predefined criterion, e.g. after a specified number of iterations. Another, more flexible approach extends the model mismatch to be minimized by an additional penalty term, the so-called regularizer, that constrains the solution in some domain to enforce expected structures or characteristics in the final reconstruction. This results in the variational minimization problem

$$ \hat{\mathbf q} = \arg\min_{\mathbf q}\; \mathcal D(\mathbf G\mathbf q - \mathbf p) + \lambda\,\mathcal R(\mathbf K\mathbf q), \tag{7} $$

where $\mathcal D(\mathbf G\mathbf q - \mathbf p)$ is a data fidelity term quantifying the model mismatch by a scalar mismatch function $\mathcal D(\cdot)$, $\mathcal R(\mathbf K\mathbf q)$ is the regularizer, usually composed of some linear transformation $\mathbf K$ and a scalar penalty function $\mathcal R(\cdot)$, which constrains the reconstruction by encoding prior knowledge or assumptions about the source, and $\lambda$ is the trade-off parameter that controls the balance between minimizing the model mismatch and satisfying the constraint. Depending on the choice of $\mathbf K$, regularization is imposed either directly on the solution estimate ($\mathbf K = \mathbf I$) or on some linear transformation thereof ($\mathbf K \neq \mathbf I$).

Provided that the regularizer is a smooth, differentiable, and convex function, its gradient is defined everywhere, and the objective in equation (7) can be minimized iteratively by gradient descent

$$ \mathbf q_{t+1} = \mathbf q_t - \tau\left[\mathbf G^H \mathcal D'(\mathbf G\mathbf q_t - \mathbf p) + \lambda\,\mathbf K^H \mathcal R'(\mathbf K\mathbf q_t)\right] \tag{8} $$

for an iteration index $t \geq 0$, given some initial $\mathbf q_0$, where the prime symbol $'$ denotes the gradient formed from the partial derivatives with respect to the estimate $\mathbf q$, so that $f'(\cdot) = \nabla_{\mathbf q} f(\cdot) = [\partial f(\cdot)/\partial q_1, \partial f(\cdot)/\partial q_2, \ldots, \partial f(\cdot)/\partial q_N]: \mathbb C^N \mapsto \mathbb C^N$, the superscript $H$ denotes the Hermitian transpose, and the multiplier $\tau > 0$ is the step size by which the coefficients are updated in the direction of the negative gradient. A different step size may be used in each iteration.
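As a sanity check of the iteration in equation (8), the sketch below runs it for the least-squares mismatch $\|\mathbf G\mathbf q - \mathbf p\|_2^2$ with $\mathbf K = \mathbf I$ and the quadratic penalty $\|\mathbf q\|_2^2$, whose minimizer is known in closed form as the Tikhonov right inverse $\mathbf G^H(\mathbf G\mathbf G^H + \lambda\mathbf I)^{-1}\mathbf p$. A random complex matrix stands in for the propagator, and the fixed step size $\tau = 1/(\sigma_{\max}^2 + \lambda)$, the value of $\lambda$, and the iteration count are assumptions.

```python
import numpy as np

def gradient_descent_tikhonov(G, p, lam, n_iter=500):
    """Minimise ||G q - p||_2^2 + lam * ||q||_2^2 with the update of equation (8), using
    D'(r) = r, K = I and R'(q) = q (gradients w.r.t. conj(q); constant factors absorbed in tau)."""
    L = np.linalg.norm(G, 2) ** 2 + lam          # Lipschitz constant of the gradient
    tau = 1.0 / L                                 # fixed step size, an illustrative choice
    q = np.zeros(G.shape[1], dtype=complex)       # initial estimate q_0 = 0
    for _ in range(n_iter):
        grad = G.conj().T @ (G @ q - p) + lam * q
        q = q - tau * grad
    return q

rng = np.random.default_rng(0)
M, N = 20, 50                                     # underdetermined, M < N
G = (rng.standard_normal((M, N)) + 1j * rng.standard_normal((M, N))) / np.sqrt(2 * M)
p = rng.standard_normal(M) + 1j * rng.standard_normal(M)
lam = 0.1 * np.linalg.norm(G, 2) ** 2

q_gd = gradient_descent_tikhonov(G, p, lam)
# Closed-form Tikhonov right inverse G^H (G G^H + lam I)^{-1} p for comparison.
q_ln = G.conj().T @ np.linalg.solve(G @ G.conj().T + lam * np.eye(M), p)
print(np.linalg.norm(q_gd - q_ln) / np.linalg.norm(q_ln))
```

The step size is the reciprocal of the gradient's Lipschitz constant, which guarantees convergence for this convex quadratic objective; with the much more ill-conditioned propagators of NAH and smaller $\lambda$, far more iterations would be needed.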

3.1 Pre-conditioning

The ill-posedness of the inverse problem to equation (3) typically translates to a large condition number of the propagation matrix G, defined as the ratio between its maximum and minimum singular value, which makes the solution q highly sensitive to small deviations in the measured data p, e.g. due to noise or geometry mismatch. Although regularized iterative solvers as in equation (8) can bypass some of the adverse effects caused by the ill-conditioned matrix G, convergence may still be negatively affected or even infeasible. It is thus common to pre-condition [73] the linear system in equation (3) by transforming it into an equivalent system with more suitable properties for its iterative solution that is easier and cheaper to solve than the original system.

In general, this can be achieved in three ways, all of which attempt to reduce the condition number and cluster the spectrum of $\mathbf G$. With left pre-conditioning, the problem is modified as

$$ \mathbf A\mathbf G\mathbf q = \mathbf A\mathbf p, \tag{9} $$

where $\mathbf A$ is an invertible matrix, called the left pre-conditioner, that operates on the sound field and is chosen such that the spectral properties of the factorized matrix $\mathbf A\mathbf G$ yield more rapid convergence of the iterative solver. Note that $\mathbf A^{-1}$ is intended to loosely approximate $\mathbf G$ such that, ideally, the pre-conditioned system in equation (9) and the original system in equation (3) yield the same solution. Similarly, the problem can be pre-conditioned from the right using

$$ \mathbf G\mathbf B\tilde{\mathbf q} = \mathbf p. $$

The iterative solver is then used to find an intermediate solution $\tilde{\mathbf q}$, from which the final solution $\mathbf q = \mathbf B\tilde{\mathbf q}$ is derived in the source domain by applying the right pre-conditioner $\mathbf B$. In addition, both approaches can be combined to pre-condition the problem from two sides via split or central pre-conditioning

$$ \mathbf A\mathbf G\mathbf B\tilde{\mathbf q} = \mathbf A\mathbf p, $$

where the central pre-conditioner becomes M=A B. Which pre-conditioner to use mainly depends on the inverse problem setup and the chosen iterative solver. Provided that suitable pre-conditioners can be found, these modifications can mitigate ill-posedness and accelerate convergence of the used minimization algorithm.
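The effect of left pre-conditioning on the condition number can be illustrated with one simple choice, $\mathbf A = (\mathbf G\mathbf G^H + \epsilon\mathbf I)^{-1/2}$, which maps the singular values of $\mathbf G$ to $\sigma_i/\sqrt{\sigma_i^2 + \epsilon}$ for $\mathbf A\mathbf G$. This pre-conditioner is an assumption made for illustration, not one proposed in the article, and it is built from $\mathbf G$ itself, which a practical method would only approximate cheaply. A synthetic matrix with singular values decaying from 1 to $10^{-6}$ stands in for the propagator.

```python
import numpy as np

rng = np.random.default_rng(1)
M, N = 30, 60

def random_unitary(n):
    """Unitary matrix from the QR decomposition of a complex Gaussian matrix."""
    Q, _ = np.linalg.qr(rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n)))
    return Q

# Stand-in for an ill-conditioned propagator: singular values decaying from 1 to 1e-6.
s = np.logspace(0, -6, M)
G = random_unitary(M) @ np.diag(s) @ random_unitary(N)[:M, :]
p = G @ (rng.standard_normal(N) + 1j * rng.standard_normal(N))

# Hypothetical left pre-conditioner A = (G G^H + eps I)^{-1/2}, via eigendecomposition.
eps = 1e-4
w, E = np.linalg.eigh(G @ G.conj().T + eps * np.eye(M))
A = E @ np.diag(w ** -0.5) @ E.conj().T

cond_G = np.linalg.cond(G)
cond_AG = np.linalg.cond(A @ G)
print(f"cond(G) = {cond_G:.1e}, cond(AG) = {cond_AG:.1e}")

# A is invertible, so A G q = A p and G q = p share the same minimum-norm solution.
q_orig = np.linalg.pinv(G) @ p
q_pre = np.linalg.pinv(A @ G) @ (A @ p)
```

Since $\mathbf A$ is invertible, both systems have the same solution set, so the pre-conditioner changes only the numerical behavior of an iterative solver, not the answer.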

3.2 Traditional solvers

The literature provides a variety of established solvers for equation (7), all based on different regularization strategies to address the ill-posedness of the problem. These solvers typically minimize equation (7) for a least-squares model mismatch $\mathcal D(\mathbf G\mathbf q - \mathbf p) = \|\mathbf G\mathbf q - \mathbf p\|_2^2$ under individual constraints $\mathcal R(\mathbf K\mathbf q)$ that geometrically shape the solution according to the available prior knowledge. The neural solver proposed in Section 4.1 is benchmarked against a representative selection of established solvers that have proven effective for sound source retrieval: the classical Least Norm (LN) [11] and Compressive Sensing (CS) [12–16] solutions, as well as those obtained from Total Variation (TV) [25, 33, 74] and its generalization (TGV) [25, 33, 75].

Least Norm (LN)

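For the LN solution, the problem in equation (7) is solved by means of a Tikhonov-regularized [11] right inverse

$$ \hat{\mathbf q}_{\mathrm{LN}} = \mathbf G^H\left(\mathbf G\mathbf G^H + \lambda\mathbf I\right)^{-1}\mathbf p, $$

which corresponds to seeking a smooth estimate with minimum energy by means of the $\lambda$-weighted $\ell_2$-norm regularizer $\mathcal R(\mathbf K\mathbf q) = \|\mathbf q\|_2^2$.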

The LN solution is closely related to the Truncated Singular Value Decomposition (TSVD), which can be computed robustly without matrix inversion from the singular value decomposition $\mathbf G = \mathbf U\boldsymbol\Sigma\mathbf V^H$ as

$$ \hat{\mathbf q}_{\mathrm{TSVD}} = \sum_{i:\,\sigma_i^2 > \lambda} \frac{\mathbf u_i^H\mathbf p}{\sigma_i}\,\mathbf v_i, $$

where $\mathbf U \in \mathbb C^{M\times M}$ and $\mathbf V \in \mathbb C^{N\times N}$ are unitary matrices whose columns are the left and right singular vectors $\mathbf u_i$ and $\mathbf v_i$, respectively, and the diagonal matrix $\boldsymbol\Sigma \in \mathbb R^{M\times N}$ contains the singular values $\sigma_i$ of the propagator $\mathbf G$.

The regularization parameter $\lambda$ is chosen such that the evanescent or weakly propagating components of $\mathbf G$ associated with vanishing singular values $\sigma_i$ are either smoothed (LN) or discarded (TSVD), in order to prevent noise amplification due to their inversion.

In their essence, both LN and TSVD are spectral filtering methods based on soft or hard filtering of weak high-frequency components, respectively, which are especially suited for the retrieval of smooth vibration patterns.
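The spectral-filtering view can be made explicit: using the economy SVD, LN applies the soft filter factors $\sigma_i^2/(\sigma_i^2 + \lambda)$, whereas TSVD applies hard 0/1 factors. In this sketch, the TSVD truncation rule $\sigma_i^2 > \lambda$, the synthetic propagator with logarithmically decaying singular values, and all function names are assumptions.

```python
import numpy as np

def svd_filtered_inverse(G, p, filt):
    """q = sum_i filt(s_i) * (u_i^H p) / s_i * v_i, using the economy SVD of G (all s_i > 0)."""
    U, s, Vh = np.linalg.svd(G, full_matrices=False)
    return Vh.conj().T @ (filt(s) * (U.conj().T @ p) / s)

def ln_solution(G, p, lam):
    """Least Norm (Tikhonov): soft filter factors s^2 / (s^2 + lam)."""
    return svd_filtered_inverse(G, p, lambda s: s**2 / (s**2 + lam))

def tsvd_solution(G, p, lam):
    """TSVD: hard filter that keeps components with s^2 > lam (assumed threshold rule)."""
    return svd_filtered_inverse(G, p, lambda s: (s**2 > lam).astype(float))

rng = np.random.default_rng(2)
M, N = 24, 48
Q1, _ = np.linalg.qr(rng.standard_normal((M, M)) + 1j * rng.standard_normal((M, M)))
Q2, _ = np.linalg.qr(rng.standard_normal((N, N)) + 1j * rng.standard_normal((N, N)))
s = np.logspace(0, -5, M)                  # decaying singular values mimic evanescent loss
G = Q1 @ np.diag(s) @ Q2[:M, :]
p = rng.standard_normal(M) + 1j * rng.standard_normal(M)
lam = 1e-4

q_ln = ln_solution(G, p, lam)
q_closed = G.conj().T @ np.linalg.solve(G @ G.conj().T + lam * np.eye(M), p)
q_tsvd = tsvd_solution(G, p, lam)
print(np.allclose(q_ln, q_closed), int(np.sum(s**2 > lam)), "of", M, "components kept by TSVD")
```

The LN result coincides with the closed-form right inverse, and the TSVD result with $\lambda = 0$ reduces to the Moore–Penrose pseudo-inverse solution.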

Compressive Sensing (CS)

CS [12–16], on the other hand, seeks the sparse solution $\hat{\mathbf q}_{\mathrm{CS}}$ with minimal $\ell_1$-norm, comprising only a few non-zero coefficients. The corresponding minimization problem with the regularizer $\mathcal R(\mathbf K\mathbf q) = \|\mathbf q\|_1$ lacks a closed-form solution and must be solved by non-linear convex optimization algorithms.
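One standard non-linear solver for this problem is the iterative shrinkage-thresholding algorithm (ISTA), which alternates a gradient step on the data term with complex soft-thresholding, the proximal operator of the $\ell_1$-norm. It is shown here only as an example; the article does not state that its CS benchmark uses ISTA. The objective is written as $\tfrac12\|\mathbf G\mathbf q - \mathbf p\|_2^2 + \lambda\|\mathbf q\|_1$ for convenience, and the random Gaussian sensing matrix, sparsity level, $\lambda$, and iteration count are assumptions.

```python
import numpy as np

def soft_threshold(z, t):
    """Complex soft-thresholding: shrink magnitudes by t and keep phases (prox of t*||.||_1)."""
    mag = np.abs(z)
    return np.where(mag > t, (1 - t / np.maximum(mag, 1e-300)) * z, 0)

def ista(G, p, lam, n_iter=3000):
    """Iterative shrinkage-thresholding for 0.5*||G q - p||_2^2 + lam*||q||_1."""
    tau = 1.0 / np.linalg.norm(G, 2) ** 2          # step size 1/L
    q = np.zeros(G.shape[1], dtype=complex)
    for _ in range(n_iter):
        q = soft_threshold(q - tau * G.conj().T @ (G @ q - p), tau * lam)
    return q

rng = np.random.default_rng(3)
M, N, k = 40, 100, 4
G = (rng.standard_normal((M, N)) + 1j * rng.standard_normal((M, N))) / np.sqrt(2 * M)
support = rng.choice(N, size=k, replace=False)
q_true = np.zeros(N, dtype=complex)
q_true[support] = np.exp(2j * np.pi * rng.random(k))   # unit magnitudes, random phases
p = G @ q_true                                           # noiseless measurements

lam = 0.01 * np.max(np.abs(G.conj().T @ p))
q_cs = ista(G, p, lam)
found = np.argsort(np.abs(q_cs))[-k:]                    # indices of the k largest magnitudes
print(set(found.tolist()) == set(support.tolist()))
```

With noiseless measurements and a small $\lambda$, the four largest coefficients of the estimate fall on the true support; the remaining deviation is the usual $\ell_1$ shrinkage of the magnitudes, which is on the order of $\lambda$.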

Total (Generalized) Variation (TV/TGV)

Both the LN and CS solutions are considered synthesis priors as they imply desired spatial structures that are directly imposed in the spatial domain (K=I). Alternatively, structural constraints can also be imposed on some transformed domain by analysis priors. A promising choice is to penalize spatial derivatives as they characterize evolution and curvature of the solution in the spatial domain.

In particular, enforcing sparse first-order spatial derivatives via the gradient ($\mathbf K = \nabla = \partial_x + \partial_y$) by TV [25, 33, 74], which promotes piecewise-constant solutions, has proven effective in modeling uniform in-phase vibrations. Similarly, enforcing sparse second-order derivatives via the Laplacian ($\mathbf K = \Delta = \partial_{xx} + \partial_{yy}$) by TGV [25, 33, 75] yields piecewise-linear solutions with minimum curvature, which is well suited for modeling continuous variations and is an appealing alternative to LN.
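The different sparsity behavior of first- and second-order derivatives can be checked on two hypothetical test patterns: a uniform in-phase disc (piston-like) and a linear ramp. The discretization by forward differences and the five-point Laplacian is one common choice and not necessarily the one used in the article.

```python
import numpy as np

def grad2d(img):
    """Forward differences along x (axis 0) and y (axis 1); zero at the far edges."""
    gx = np.diff(img, axis=0, append=img[-1:, :])
    gy = np.diff(img, axis=1, append=img[:, -1:])
    return np.stack([gx, gy])

def lap2d(img):
    """Five-point discrete Laplacian d_xx + d_yy on interior grid points."""
    return (img[2:, 1:-1] + img[:-2, 1:-1] + img[1:-1, 2:] + img[1:-1, :-2]
            - 4 * img[1:-1, 1:-1])

def nonzero_fraction(a, tol=1e-12):
    """Share of entries whose magnitude exceeds tol."""
    return np.mean(np.abs(a) > tol)

n = 32
X, Y = np.meshgrid(np.arange(n, dtype=float), np.arange(n, dtype=float), indexing="ij")
piston = ((X - 15.5) ** 2 + (Y - 15.5) ** 2 < 8.0 ** 2).astype(complex)  # uniform in-phase disc
ramp = (X + 0.5 * Y).astype(complex)                                      # linear variation

for name, img in (("piston", piston), ("ramp", ramp)):
    print(f"{name}: gradient {nonzero_fraction(grad2d(img)):.2f}, "
          f"Laplacian {nonzero_fraction(lap2d(img)):.2f} non-zero")
```

The disc has a sparse gradient concentrated on its rim, while the ramp has a dense gradient but a Laplacian that vanishes exactly; this is why TV favors piecewise-constant and the Laplacian penalty piecewise-linear reconstructions.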

By sparsifying spatial derivatives, TV and TGV tend to produce spatially extended source patterns, which makes them unsuited for the reconstruction of spatially compact sound sources. However, fusing TV and TGV with an additional $\mu$-weighted CS constraint for spatial sparsity extends their applicability to compact sources by promoting block-sparse solutions, in which the non-zero coefficients are not necessarily sparse but spatially grouped together [25, 33]. In this study, these fused constraints are termed CS-TV and CS-TGV, respectively. The associated regularizers are $\mathcal R(\mathbf K\mathbf q) = \|[\nabla, \mu\mathbf I]^T\mathbf q\|_1$ for CS-TV and $\mathcal R(\mathbf K\mathbf q) = \|[\Delta, \mu\mathbf I]^T\mathbf q\|_1$ for CS-TGV, where the weight $\mu$ controls the level of spatial sparsity.
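Because the gradient step in equation (8) needs both $\mathbf K$ and $\mathbf K^H$, it can help to write the fused operators as explicit matrices. The sketch below stacks 2D difference operators with the weighted identity $\mu\mathbf I$ for images flattened in row-major order; the boundary handling (zero rows at the grid edges), the grid size, $\mu = 0.5$, and the helper names are assumptions, and dense matrices are used only because the grid is tiny.

```python
import numpy as np

def forward_diff_matrix(n):
    """1D forward difference with a zero last row (replicated boundary)."""
    D = np.eye(n, k=1) - np.eye(n)
    D[-1, :] = 0.0
    return D

def second_diff_matrix(n):
    """1D second difference [1, -2, 1] with zero boundary rows."""
    D2 = np.eye(n, k=1) - 2 * np.eye(n) + np.eye(n, k=-1)
    D2[0, :] = 0.0
    D2[-1, :] = 0.0
    return D2

def cs_tv_operator(nx, ny, mu):
    """K = [D_x; D_y; mu*I] acting on images flattened in C (row-major) order."""
    Dx = np.kron(forward_diff_matrix(nx), np.eye(ny))
    Dy = np.kron(np.eye(nx), forward_diff_matrix(ny))
    return np.vstack([Dx, Dy, mu * np.eye(nx * ny)])

def cs_tgv_operator(nx, ny, mu):
    """K = [D_xx + D_yy; mu*I], the Laplacian stacked with the weighted identity."""
    lap = (np.kron(second_diff_matrix(nx), np.eye(ny))
           + np.kron(np.eye(nx), second_diff_matrix(ny)))
    return np.vstack([lap, mu * np.eye(nx * ny)])

def l1(z):
    """l1-norm of a complex array: sum of magnitudes."""
    return np.sum(np.abs(z))

nx, ny, mu = 8, 10, 0.5
rng = np.random.default_rng(4)
q = rng.standard_normal((nx, ny)) + 1j * rng.standard_normal((nx, ny))
K_tv = cs_tv_operator(nx, ny, mu)
K_tgv = cs_tgv_operator(nx, ny, mu)
R_tv = l1(K_tv @ q.ravel())      # ||[grad, mu I]^T q||_1
R_tgv = l1(K_tgv @ q.ravel())    # ||[Lap, mu I]^T q||_1
# The adjoint needed in the gradient step is simply K_tv.conj().T (or K_tgv.conj().T).
print(R_tv, R_tgv)
```

The CS-TV value equals the TV term plus $\mu$ times the $\ell_1$-norm of the image, so increasing $\mu$ shifts the preference from spatially grouped towards spatially sparse solutions.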

4 Neural solvers

Considerable effort has recently been put into the development of physics-guided neural architectures based on unrolled iterative optimizers. The idea is to design a neural network by unrolling a limited number of iterations of an optimization algorithm that iteratively approximates the solution to a variational problem using prior knowledge. Each iteration then represents one network layer composed of fixed or learnable update steps that either ensure data consistency or act as regularization. Following this concept, Section 4.1 proposes to solve the NAH task with a physics-constrained Variational Network (VN), a term first introduced by Kobler et al. [63] to capture the close theoretical connection between variational methods and neural networks that guides its architectural design. In particular, the VN architecture is derived from the generalized gradient descent method in equation (8), which solves the variational problem in equation (7) to retrieve the optimal strengths of an equivalent source distribution from the measurements despite the ill-posedness of the problem.

The Point Neuron Network (PNN) [54] is another neural approach to NAH with a physics-guided architecture based on equivalent sources that is closely related to the proposed VN and thus lends itself as a useful additional benchmark; its concept is briefly reviewed in Section 4.2.

4.1 Proposed physics-constrained neural solver based on optimizer unrolling

A generalization of the regularization term in equation (7) is the Fields of Experts (FoE) model [68] in equation (14), which comprises a linear combination of Nℛ regularizers based on independent linear transformations Ki and scalar penalty functions ℛi(·) that penalize unfavorable structures in different solution domains with high output values. Instead of using problem-specific, handcrafted transformations Ki and penalty functions ℛi(·), and thereby relying on expert knowledge or assumptions, the FoE model learns parametrized variants of them from training data.

Under the assumption of Additive White Gaussian Noise (AWGN), the model mismatch in equation (7) is commonly chosen to be the least-squares objective $\|\mathbf{G}\mathbf{q}-\mathbf{p}\|_x^y$ with $x=y=2$, where $\|\cdot\|_x$ denotes the $\ell_x$-norm. In this case, it defines a convex quadratic function of the residual with a unique global minimum. Relaxing this assumption enables a generalization of the model mismatch [69, 76–78] to a set of scalar mismatch functions that may also be parametrized and learned from data. Introducing learnable split pre-conditioners Ak and Bk as in equation (11), which operate on the field and source domain, respectively, further increases flexibility and may simplify the problem, providing faster and more stable convergence. This yields the generalized model mismatch in equation (15).

Since each summand in equation (15) quantifies an independently pre-conditioned problem, it becomes impractical to minimize the total model mismatch with respect to an intermediate substitute from which the final solution can be derived as in equation (11), as this requires the inverse problem to be conditioned by a single right pre-conditioner B. Instead, the independent right pre-conditioners Bk are introduced as supplementary domain transformations that provide additional opportunities to learn implicit features from data, as in [77]. The use of adaptive mismatch functions and pre-conditioning is supported by ablation studies [76, 78] showing improved reconstruction accuracy due to the additional degrees of freedom in data fidelity. This is also backed by other studies of unrolled optimizers [79] that report a substantial performance increase from suitable pre-conditioning, while allowing for a reduced model size and faster training.

Embedding the FoE regularizer in equation (14) and the generalized model mismatch in equation (15) within the variational framework in equation (7) yields

which, under the premise that all functions are continuously differentiable and convex, can be iteratively minimized by gradient descent in equation (8), performing the update

for the iteration index t≥0, given some initial q0.

Proposed Variational Network (VN)

Additional regularization is accomplished by early stopping [72], i.e. truncating the optimization scheme after a pre-defined number of iterations t=T. The use of information from earlier iterates stored in persistent memory [80, 81] is a common means to improve computational efficiency. In particular, the introduction of momentum [82] as a weighted running average of past gradients can further improve estimation accuracy and accelerate convergence by naturally introducing skip connections that improve gradient flow through the network, which has been verified by ablation studies [76, 78]. Unrolling the finite update sequence and letting all adjustable parameters, i.e. domain transformations, penalty functions, trade-off weights, and step-sizes vary across iterations t for maximum flexibility, yields the modified VN architecture [63, 64, 66, 67]

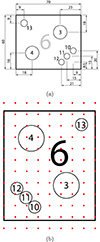

for 0≤t≤T, as shown in Figure 2a, composed of the data fidelity or model mismatch network

|

Figure 2. (a) The Variational Network (VN) architecture corresponding to equation (18), comprised of (b) the model mismatch network defined by equation (19), (c) the regularizer network defined by equation (20), and (d) the momentum network defined by equation (21). |

in Figure 2b, the regularizer network

in Figure 2c, and the momentum network

with learnable momentum parameters  and

and  in Figure 2d, where the momentum index 0≤l≤t−1 runs from the initial up to the current iteration.

in Figure 2d, where the momentum index 0≤l≤t−1 runs from the initial up to the current iteration.

The iteration-dependent step size τt and regularization weight λt are absorbed into the scaling of the gradients for brevity. Passing through the network is equivalent to executing the iterative algorithm for a fixed number of T iterations. The algorithm parameters naturally become the network weights θ, which are either fixed or learned from training data. This provides the freedom to decide which parameters are best extracted from data and which are better modeled according to prior knowledge.
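The unrolling idea can be illustrated with a deliberately simplified NumPy sketch. Since equations (18) to (21) are not reproduced here, the update below is an assumption in their spirit, not the VN itself: each layer t takes a gradient step that combines a least-squares data term τt Gᴴ(Gqt − p) and a regularizer term λt Kᴴϕ(Kqt), and subtracts a weighted sum of the gradients of earlier layers as momentum. Dense matrices stand in for the convolutions, and a fixed bounded function stands in for the learned activations. The names `unrolled_forward`, `betas` and `bounded` are hypothetical.

```python
import numpy as np

def unrolled_forward(p, G, K, q0, taus, lams, betas, phi):
    """Hypothetical simplified VN forward pass: one layer per unrolled gradient step."""
    q = q0.copy()
    grads = []                                         # memory of earlier gradients
    for t, (tau, lam) in enumerate(zip(taus, lams)):
        g_data = tau * (G.conj().T @ (G @ q - p))      # least-squares data-consistency gradient
        g_reg = lam * (K.conj().T @ phi(K @ q))        # regularizer gradient K^H phi(K q)
        g = g_data + g_reg
        # momentum: weighted sum of gradients from layers 0..t-1 (evaluates to 0 when t == 0)
        momentum = sum(betas[t][l] * grads[l] for l in range(t))
        q = q - g - momentum
        grads.append(g)
    return q

def bounded(z):
    """Placeholder activation: bounded magnitude, phase preserved."""
    return z / (1.0 + np.abs(z))

rng = np.random.default_rng(0)
M, N, T = 8, 16, 10
G = rng.standard_normal((M, N)) + 1j * rng.standard_normal((M, N))
p = G @ (rng.standard_normal(N) + 1j * rng.standard_normal(N))
step = 1.0 / np.linalg.norm(G, 2) ** 2                 # safe step size for the data term
q_T = unrolled_forward(p, G, np.eye(N), np.zeros(N, complex),
                       taus=[step] * T, lams=[0.0] * T,
                       betas=np.zeros((T, T)), phi=bounded)
```

With λt = 0 and all momentum weights set to zero, the sketch reduces to T steps of plain gradient descent on the least-squares term (an early-stopped Landweber iteration); in the VN, the per-layer step sizes, weights, filters and activations would instead be learned.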

The linear transformations $\mathbf{K}_i$, $\mathbf{A}_k$, and $\mathbf{B}_k$ are assumed to be sparse and convolutional, and can thus be realized as complex-valued 2D convolutions over (x,y) with corresponding filter kernels $k_i$, $a_k$, and $b_k$ of uniform size d, i.e. $\mathbf{K}_i\mathbf{q}\Leftrightarrow k_i\ast\mathbf{q}$, $\mathbf{A}_k\mathbf{p}\Leftrightarrow a_k\ast\mathbf{p}$ and $\mathbf{B}_k\mathbf{q}\Leftrightarrow b_k\ast\mathbf{q}$. The propagator $\mathbf{G}$ is convolutional by nature; for a planar geometry it is implemented either as a linear layer by direct application of the convolutional matrix $\mathbf{G}$, or as a 2D convolution with a fixed Green's function kernel g, i.e. $\mathbf{G}\mathbf{q}\Leftrightarrow g\ast\mathbf{q}$. Under appropriate boundary conditions and padding [65, 66], the Hermitian transposed operators $\mathbf{G}^H$, $\mathbf{K}_i^H$, $\mathbf{A}_k^H$, and $\mathbf{B}_k^H$ can be implemented as complex-valued 2D convolutions with the 180°-rotated, complex-conjugated filter kernels $\bar{g}^{*}$, $\bar{k}_i^{*}$, $\bar{a}_k^{*}$, and $\bar{b}_k^{*}$, respectively, i.e. $\mathbf{G}^H\mathbf{p}\Leftrightarrow\bar{g}^{*}\ast\mathbf{p}$, $\mathbf{K}_i^H\mathbf{q}\Leftrightarrow\bar{k}_i^{*}\ast\mathbf{q}$, $\mathbf{A}_k^H\mathbf{p}\Leftrightarrow\bar{a}_k^{*}\ast\mathbf{p}$, and $\mathbf{B}_k^H\mathbf{q}\Leftrightarrow\bar{b}_k^{*}\ast\mathbf{q}$, where the overline indicates kernel rotation and the superscript $^{*}$ complex conjugation. In the literature, this operation is often misleadingly referred to as deconvolution, although it does not invert the convolution. The first-order derivatives of the penalty and mismatch functions, such as $\mathcal{R}_i'(\cdot)$, act as the activation functions of the network and are applied element-wise to each component of their input arguments.
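The statement that a Hermitian transposed convolution is a convolution with the 180°-rotated, conjugated kernel can be checked numerically. The sketch below assumes an odd, square kernel and zero boundary conditions with an output of the same size as the input (a simplifying choice; boundary handling in the cited works [65, 66] may differ), and verifies the adjoint identity ⟨Kx, y⟩ = ⟨x, Kᴴy⟩ for random complex data. `conv2d_same` and `conv2d_same_adjoint` are hypothetical helpers written in plain NumPy, not library functions.

```python
import numpy as np

def conv2d_same(x, k):
    """Zero-padded, 'same'-size 2D convolution with an odd, square kernel k (hypothetical helper)."""
    d, r = k.shape[0], k.shape[0] // 2
    xp = np.pad(x, r)                                   # zero boundary conditions
    kf = k[::-1, ::-1]                                  # flipped kernel: convolution, not correlation
    out = np.zeros(x.shape, dtype=np.result_type(x, k))
    for i in range(d):
        for j in range(d):
            out += kf[i, j] * xp[i:i + x.shape[0], j:j + x.shape[1]]
    return out

def conv2d_same_adjoint(y, k):
    """Hermitian transpose: convolution with the 180-degree rotated, complex-conjugated kernel."""
    return conv2d_same(y, np.conj(k[::-1, ::-1]))

rng = np.random.default_rng(1)
x = rng.standard_normal((12, 10)) + 1j * rng.standard_normal((12, 10))
y = rng.standard_normal((12, 10)) + 1j * rng.standard_normal((12, 10))
k = rng.standard_normal((5, 5)) + 1j * rng.standard_normal((5, 5))

lhs = np.vdot(y, conv2d_same(x, k))                 # <K x, y> = y^H K x
rhs = np.vdot(conv2d_same_adjoint(y, k), x)         # <x, K^H y> = (K^H y)^H x
```

Both inner products agree up to rounding, which is exactly the property that lets Kᵢᴴ, Aₖᴴ, Bₖᴴ and Gᴴ share their kernels with the forward layers.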

The unrolled optimization scheme can be considered a T-layer neural network, where each iteration represents one composite layer composed of residual Convolutional Neural Networks (CNNs) with skip connections; this structural similarity is highlighted in Figure 1 for a simplified VN based on a single model mismatch and regularization cost for the sake of clarity. The model mismatch network and the regularizer network share a similar structure, resembling a symmetric multi-layer CNN with regular and transposed convolutional input and output layers, respectively, which are connected through a tunable activation layer that introduces non-linearity.

In the regularizer network, the convolutional layers $\mathbf{K}_i$ and $\mathbf{K}_i^H$ share the same set of Nk linear filters $k_i$ (with $\mathbf{K}_i^H$ using their rotated, conjugated versions), which are learned from data and vary within and across layers. The same holds for the left and right pre-conditioners $\mathbf{A}_k$ and $\mathbf{B}_k$, and their respective Hermitian conjugates $\mathbf{A}_k^H$ and $\mathbf{B}_k^H$, in the model mismatch network. In contrast, the convolutional layers $\mathbf{G}$ and $\mathbf{G}^H$ use a fixed filter kernel g that is shared across all layers; this is the physics-guided aspect of the network architecture, as it implements the physics of wave propagation. Moreover, a problem-dependent bias term p in the input layer of the model mismatch network ensures consistency of the solution with the measurements.
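A regularizer network with shared filters can be sketched in the same spirit: each filter is applied to q, the response passes through an element-wise activation, and the result is mapped back with the rotated, conjugated version of the same filter before all branches are summed. The sketch omits the learned factorized layers Lᵢ of Section 5.2 and uses identity activations for the check, so that the output equals Σᵢ KᵢᴴKᵢq and ⟨q, output⟩ = Σᵢ‖kᵢ∗q‖² is real and non-negative. `conv2d_same` and `regularizer_network` are hypothetical helpers.

```python
import numpy as np

def conv2d_same(x, k):
    """Zero-padded, 'same'-size 2D convolution with an odd, square kernel k (hypothetical helper)."""
    d, r = k.shape[0], k.shape[0] // 2
    xp = np.pad(x, r)
    kf = k[::-1, ::-1]                                  # flipped kernel: convolution
    out = np.zeros(x.shape, dtype=np.result_type(x, k))
    for i in range(d):
        for j in range(d):
            out += kf[i, j] * xp[i:i + x.shape[0], j:j + x.shape[1]]
    return out

def regularizer_network(q, kernels, activations):
    """Sum over branches i of K_i^H phi_i(K_i q); K_i and K_i^H share the kernel k_i."""
    out = np.zeros(q.shape, dtype=complex)
    for k, phi in zip(kernels, activations):
        response = conv2d_same(q, k)                               # input layer K_i
        out += conv2d_same(phi(response), np.conj(k[::-1, ::-1]))  # output layer K_i^H
    return out

rng = np.random.default_rng(0)
q = rng.standard_normal((9, 9)) + 1j * rng.standard_normal((9, 9))
kernels = [rng.standard_normal((3, 3)) + 1j * rng.standard_normal((3, 3)) for _ in range(4)]
identity = [lambda z: z] * len(kernels)               # linear activations for the check
out = regularizer_network(q, kernels, identity)
```

Replacing the identity by learned, non-linear activations turns each branch into the symmetric CNN block described above, while the weight sharing between input and output layers is kept.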

4.2 Point Neuron Network (PNN)

The Point Neuron Network (PNN) proposed in [54] implicitly satisfies the wave equation, as its fundamental solution, the free-space Green's function in equation (2), is directly embedded into the architectural design. In its essence, the PNN is closely related to the ESM, in that it models an arbitrary sound field p by superimposing the sound radiation of N distributed equivalent sources, termed point neurons, whose complex-valued strengths $q_n$ and physical locations $\mathbf{r}_{0,n}=[x_{0,n},y_{0,n},z_{0,n}]^T$ are encoded in the neuronal weights $w_n$ and biases $\mathbf{b}_n=[x_{0,n},y_{0,n},z_{0,n}]^T$, respectively, and whose optimal values are inferred from a single set of sound field measurements p via unsupervised learning.

The PNN describes the sound pressure at rm as

which closely resembles equation (1) and highlights the analogy $w_n=j\omega\rho\,q(\mathbf{r}_{0,n})$. In contrast to the ESM, the PNN uses a normalized Green's function

to relate a point neuron located at bn to its radiated sound pressure at rm, where the normalization factor

enables modeling of both near- and far-field propagation. However, as this study is mainly concerned with NAH, the normalization is omitted by setting χ=1, which reduces PN(·) in equation (23) to the Green's function G(·) in equation (2), such that the PNN is governed by the same fundamental equations (1)–(3) as the ESM.
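With χ = 1, the PNN forward model is a plain superposition of free-space Green's functions placed at the biases bₙ and weighted by wₙ. The sketch below assumes the form G(R) = e^(−jkR)/(4πR), i.e. an e^(+jωt) time convention, for equation (2), which is not reproduced here; with the opposite convention the sign of the exponent flips. The frequency, density and speed-of-sound values are illustrative, and in the PNN the weights and biases would be learned by gradient descent rather than fixed. Function names are hypothetical.

```python
import numpy as np

def greens_function(r_m, b, k):
    """Assumed free-space Green's function exp(-jkR) / (4 pi R) for every (r_m, b_n) pair."""
    R = np.linalg.norm(r_m[:, None, :] - b[None, :, :], axis=-1)   # (M, N) distances
    return np.exp(-1j * k * R) / (4 * np.pi * R)

def pnn_pressure(r_m, b, w, k):
    """Point-neuron forward model with chi = 1: p(r_m) = sum_n w_n G(r_m, b_n)."""
    return greens_function(r_m, b, k) @ w

# Illustrative values only: 500 Hz in air.
f, c, rho = 500.0, 343.0, 1.2
omega = 2 * np.pi * f
k = omega / c
q = np.array([1.0 + 0.5j, -0.3j])                     # equivalent-source strengths
w = 1j * omega * rho * q                               # neuron weights via w_n = j omega rho q_n
b = np.array([[0.0, 0.0, -0.05], [0.1, 0.0, -0.05]])   # neuron biases = source positions (m)
r_m = np.array([[0.0, 0.0, 0.0], [0.05, 0.02, 0.0]])   # measurement positions (m)
p = pnn_pressure(r_m, b, w, k)
```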

5 Experimental setup

5.1 Setup of traditional solvers

The regularization parameter λ in equation (7) is manually chosen for each method according to the simple, yet effective criterion [29]

which has empirically proven to be a reliable ad-hoc choice for good performance of the solvers in Section 3.2, with the option of further fine-tuning. The criterion aims to exclude from the reconstruction those singular values of G that lie far below its largest singular value $s_{\max}$, based on an estimate of the given Signal-to-Noise Ratio (SNR). The hyper-parameters of the traditional solvers are carefully tuned to optimally fit each source type within their expressive limits. Note that, although the VN is trained only once on a set of representative training data and is then expected to generalize over all test cases, the truncation parameter of its initializer in equation (31) is also set by equation (25) to provide a faithful starting point.

Following the convention in the paper of Fernandez-Grande and Daudet [25], the weight that controls the level of sparsity in the fused CS-TV and CS-TGV constraints described in Section 3.2 is set to μ=1, which offers a good trade-off between spatial and differential sparsity.

In this study, the Fast Iterative Shrinkage-Thresholding Algorithm (FISTA) is used to implement CS, and the Condat-Vu algorithm [83, 84], which is a generalization of the Primal-Dual Hybrid Gradient or Chambolle-Pock method [85], is used to implement TV and its variants. The LN solution requires no iterative approximation but is derived in closed form as in equation (12).
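For reference, a minimal FISTA sketch for the CS problem, assuming the form min ½‖Gq − p‖₂² + λ‖q‖₁ (the ½ scaling of the data term is an assumption about equation (7)). The proximal step is complex soft-thresholding, which shrinks magnitudes and preserves phases; the fixed iteration count and the step size 1/L with L = ‖G‖₂² are illustrative choices, not the study's settings. Function names are hypothetical.

```python
import numpy as np

def soft_threshold(z, thresh):
    """Proximal operator of thresh*||.||_1 for complex z: shrink magnitudes, keep phases."""
    mag = np.abs(z)
    return np.where(mag > thresh, (1 - thresh / np.maximum(mag, 1e-30)) * z, 0)

def fista(G, p, lam, n_iter=200):
    """FISTA for min_q 0.5*||G q - p||_2^2 + lam*||q||_1."""
    L = np.linalg.norm(G, 2) ** 2                      # Lipschitz constant of the data gradient
    q = np.zeros(G.shape[1], dtype=complex)
    y, s = q.copy(), 1.0
    for _ in range(n_iter):
        grad = G.conj().T @ (G @ y - p)
        q_next = soft_threshold(y - grad / L, lam / L)
        s_next = (1 + np.sqrt(1 + 4 * s ** 2)) / 2
        y = q_next + ((s - 1) / s_next) * (q_next - q)  # Nesterov extrapolation
        q, s = q_next, s_next
    return q
```

The extrapolation step is what distinguishes FISTA from plain ISTA and gives its faster O(1/k²) decay of the objective.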

For the TV variants, the first- and second-order spatial derivatives in the gradient and Laplacian are discretized via forward finite differences and implemented as convolutions with 3-point and 5-point stencils, respectively, and zero boundary conditions are used, which consistently provided the best results for all test sources.

5.2 Setup and training of proposed VN

The VN in this study is made up of T=10 layers, resulting in a total of 167 190 learnable complex-valued network parameters θ. Table 1 shows the number and dimensionality of the learnable parameters for each VN layer t.

Table 1. Number and dimensionality of the learnable complex-valued parameters θ of each VN layer t.

Convolutional filters

There are many ways to implement the convolutional operations within the network layers (shown here for the regularizer network as an example). One can treat the complex-valued input q as a real-valued entity of twice the size by stacking its magnitude and phase, or its real and imaginary parts. Both parts can then be convolved separately with either shared [76] or distinct real-valued kernels [64], the latter doubling the number of parameters. This study, however, adopts the more natural approach of complex convolutions of the input q with complex-valued kernels $k\in\mathbb{C}^{d\times d}$, which have been shown to provide quantitative improvements over split-complex variants [56, 86]. This operation can be performed by a real-valued convolutional layer with two two-channel kernels $k_1=[k_{\mathrm{re}},-k_{\mathrm{im}}]\in\mathbb{R}^{d\times d\times 2}$ and $k_2=[k_{\mathrm{im}},k_{\mathrm{re}}]\in\mathbb{R}^{d\times d\times 2}$ applied to the split input $q=[q_{\mathrm{re}},q_{\mathrm{im}}]\in\mathbb{R}^{N_y\times N_x\times 2}$, resulting in the output $[(k_{\mathrm{re}}\ast q_{\mathrm{re}}-k_{\mathrm{im}}\ast q_{\mathrm{im}}),(k_{\mathrm{re}}\ast q_{\mathrm{im}}+k_{\mathrm{im}}\ast q_{\mathrm{re}})]\in\mathbb{R}^{N_y\times N_x\times 2}$, from which $k\ast q$ can easily be assembled [87]. Note that, for the same total number of parameters, complex convolution requires twice as many real-valued convolutions as its real-valued counterpart and thus significantly increases training time. Zero boundary conditions are used to match the conditions imposed on the traditional solvers TV and its variants, which rely on convolutions to compute the spatial derivatives.
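The equivalence between one complex convolution and the two two-channel real-valued kernels k₁ and k₂ can be checked directly. The sketch assumes a zero-padded, 'same'-size convolution implemented by a small hypothetical NumPy helper, and shows that the four real-valued convolutions reproduce k∗q.

```python
import numpy as np

def conv2d_same(x, k):
    """Zero-padded, 'same'-size 2D convolution with an odd, square kernel k (hypothetical helper)."""
    d, r = k.shape[0], k.shape[0] // 2
    xp = np.pad(x, r)
    kf = k[::-1, ::-1]                                  # flipped kernel: convolution
    out = np.zeros(x.shape, dtype=np.result_type(x, k))
    for i in range(d):
        for j in range(d):
            out += kf[i, j] * xp[i:i + x.shape[0], j:j + x.shape[1]]
    return out

def complex_conv_via_real(q, k):
    """Complex convolution from four real convolutions (two two-channel real kernels)."""
    q_re, q_im, k_re, k_im = q.real, q.imag, k.real, k.imag
    out_re = conv2d_same(q_re, k_re) - conv2d_same(q_im, k_im)   # kernel k1 = [k_re, -k_im]
    out_im = conv2d_same(q_re, k_im) + conv2d_same(q_im, k_re)   # kernel k2 = [k_im, k_re]
    return out_re + 1j * out_im                                   # assemble k * q

rng = np.random.default_rng(2)
q = rng.standard_normal((9, 7)) + 1j * rng.standard_normal((9, 7))
k = rng.standard_normal((3, 3)) + 1j * rng.standard_normal((3, 3))
split = complex_conv_via_real(q, k)
direct = conv2d_same(q, k)
```

The four calls in `complex_conv_via_real` make the doubled convolution count explicit: a split-complex layer with the same parameter budget needs only two real-valued convolutions.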

To enhance the expressiveness of the regularizer network and align its structure with that of the model mismatch network, its convolutional transformation is factorized into two independent transformations K and L such that

The outer convolutional layers, i.e. $\mathbf{L}_i$ and $\mathbf{L}_i^H$ in the regularizer network and $\mathbf{B}_k$ and $\mathbf{B}_k^H$ in the model mismatch network, use Nℛ=32 filter kernels in the regularizer network and a corresponding set of filter kernels in the model mismatch network, all of uniform size $d_l=d_b=5$. While the regular kernels of $\mathbf{L}_i$ and $\mathbf{B}_k$ expand the single-channel input into a multi-channel feature space (Nℛ-dimensional in the regularizer network), the transposed kernels of $\mathbf{L}_i^H$ and $\mathbf{B}_k^H$ eventually compress the multi-channel features back into a single source estimate. The inner convolutional layers, i.e. $\mathbf{K}_i$ and $\mathbf{K}_i^H$ in the regularizer network and $\mathbf{A}_k$ and $\mathbf{A}_k^H$ in the model mismatch network, operate on the feature space by applying different sets of filter kernels (Nℛ=32 in the regularizer network) of uniform size $d_k=d_a=3$ to each extracted feature, thereby sharing knowledge across channels. Additionally, a least-squares gradient step $\tau_t\mathbf{G}^H(\mathbf{G}\mathbf{q}_t-\mathbf{p})$ with a trainable scalar step size $\tau_t$ is used in every VN layer; here, the left and right pre-conditioners reduce to the identity, $\mathbf{B}=\mathbf{A}=\mathbf{I}$, and the activation becomes linear, which was found to stabilize training. The output layers of both networks are depth-wise separable, such that their individual feature maps, which represent gradients, can be accessed separately by the momentum network, where they are re-weighted by learnable scalars before finally being summed up.

Activation functions

Here, the potential functions (the mismatch functions and ℛ(·)) and the corresponding activations (their derivatives, such as ℛ′(·)) are represented by ρ(·) and ϕ(·):=ρ′(·), respectively, for brevity. Learning the activations ϕ(·) requires differentiable parametrizations that account for the conditions imposed on their associated potential functions ρ(·). Optimization-based NAH typically relies on convex, ℓp-norm-based (i.e. p≥1) scalar potential functions ρ(·) that define the cost to be minimized in each iteration, and their derivatives ϕ(·) are monotonically increasing vector functions that apply element-wise to their complex-valued argument. However, the aim of the VN is not the successive minimization of a cost, but rather learning a generalized mapping from inputs to desired targets, which allows the convexity constraint to be relaxed and permits the use of more expressive mappings.

A split-complex approach is often adopted to process the complex-valued input, where activations of real-valued argument, $\phi:\mathbb{R}\to\mathbb{R}$, with individual learnable parameters are applied separately to magnitude and phase, or to real and imaginary parts. This study, however, considers the use of activations with complex-valued argument and output, $\phi:\mathbb{C}\to\mathbb{C}$ [56, 88, 89].

The activations are applied element-wise, $\phi_i^t(\mathbf{q})=[\phi_i^t(q_1),\phi_i^t(q_2),\ldots,\phi_i^t(q_N)]^T$, and are realized as parametric interpolators

using affine combinations of Nw Radial Basis Functions (RBFs) φj(·) with learnable complex-valued weights and a single learnable bias [63–66] per layer t and instance i, which can be written in matrix notation as

Gaussian kernels [90]

are a popular choice for approximating arbitrary functions due to their radial symmetry, smoothness and universal approximation properties [91], and they naturally extend to complex-valued inputs [88]. Here, $\mu_j\in\mathbb{C}$ is the center of the j-th Gaussian on the complex plane, and $\sigma_j\in\mathbb{R}$ is the real-valued standard deviation that controls its circularly symmetric spread. Note that, although the proposed activations naturally process complex-valued data, the exponential function within the Gaussian kernels in equation (30) still maps to real values, $\varphi_j:\mathbb{C}\to\mathbb{R}$, which results in real-valued gradients that can negatively affect phase approximation [89]. Alternative Gaussian-like kernels from the class of elementary transcendental functions that provide fully complex mappings $\varphi_j:\mathbb{C}\to\mathbb{C}$ with complex-valued gradients, such as the hyperbolic secant [89], were also initially considered for more accurate phase retrieval, but were eventually discarded, as their isolated singularities make training prone to instability and divergence.
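A single Gaussian RBF activation of this kind can be sketched as follows. The exact normalization inside the exponential of equation (30) is not reproduced here, so the form exp(−|z − μⱼ|²/(2σⱼ²)) is an assumption. The basis responses are real-valued, which mirrors the remark on real-valued gradients, while the learnable complex weights and bias make the output complex. The function name is hypothetical.

```python
import numpy as np

def rbf_activation(z, centers, sigmas, weights, bias):
    """Element-wise phi(z) = sum_j w_j exp(-|z - mu_j|^2 / (2 sigma_j^2)) + b for complex z."""
    z = np.asarray(z)
    d2 = np.abs(z[..., None] - centers) ** 2        # squared distances to the centers mu_j
    basis = np.exp(-d2 / (2.0 * sigmas ** 2))        # real-valued Gaussian responses
    return basis @ weights + bias                     # learnable complex weights and bias
```

Because the centers broadcast against a trailing axis, the same function applies element-wise to a whole feature map of any shape.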

To optimally cover the input range, the RBF centers μj should be evenly distributed over the area spanned by the extrema of the complex-valued filter responses (i.e. the minimum and maximum real and imaginary parts of the filter outputs that enter the activations of the regularizer network and the model mismatch network). This is problematic, as these extrema vary across instances i or k, layers t, and training batches due to constantly changing filter and input statistics (the internal covariate shift [92]), which calls for standardization. Instance normalization [93] has proven effective in stabilizing real-valued inputs by forcing channels, which represent the individual inputs of each RBF activation, to follow a standard normal distribution $\mathcal{N}(0,1)$ with zero mean and unit variance through channel-wise translation and scaling. For complex-valued inputs, a naive implementation would apply instance normalization separately to the real and imaginary parts. However, this transformation is not sufficient for the input to follow a standard complex normal distribution $\mathcal{CN}(0,1,0)$ with zero mean μ, unit covariance Γ and zero relation C, as it leaves any correlation between the real and imaginary parts untouched, so that the distribution can lose its circular symmetry and become eccentric [94, 95]. A 2D whitening operation was proposed [94] to avoid this problem by directly rescaling the covariance, thereby ensuring circular symmetry of the resulting distribution, which eases adequate sampling of the complex plane with fixed RBF patterns. For this reason, 2D whitening is adopted here.
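The 2D whitening step can be sketched for the samples of one channel as follows. Real and imaginary parts are treated as a 2D real vector, centered, and multiplied by the inverse square root of their 2×2 covariance matrix, scaled so that the result has unit complex covariance E|z|² = 1 and zero relation E[z²] = 0. This follows the general idea of complex whitening; per-instance bookkeeping and any learnable affine parameters after whitening are omitted, and the function name is hypothetical.

```python
import numpy as np

def complex_whiten(z, eps=1e-8):
    """Map complex samples to zero mean, unit covariance E|z|^2 = 1 and zero relation E[z^2] = 0."""
    x = np.stack([z.real.ravel(), z.imag.ravel()])       # (2, n): real and imaginary parts
    x = x - x.mean(axis=1, keepdims=True)                 # remove the mean
    V = np.cov(x, bias=True) + eps * np.eye(2)            # 2x2 covariance of (Re, Im)
    evals, evecs = np.linalg.eigh(V)
    W = evecs @ np.diag(evals ** -0.5) @ evecs.T / np.sqrt(2)  # V^(-1/2), variance 1/2 per part
    y = W @ x
    return (y[0] + 1j * y[1]).reshape(z.shape)
```

Scaling each part separately would leave the correlated example in the test elliptical; multiplying by the full inverse square root removes that correlation, so the output is circularly symmetric.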

The Nw=64 RBF centers μj are arranged in a sunflower seed pattern [96] that evenly samples the circularly symmetric input distribution. The spread of the RBFs is derived from the k-nearest-neighbors heuristic [97], with the standard deviation σj of each Gaussian chosen as the minimal distance of its center μj to its k=3 nearest centers. While all RBF weights and biases are learned, the centers μj and standard deviations σj of the Gaussian kernels remain fixed to ensure sufficient resolution in all regions of the complex plane throughout the training process.
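The fixed RBF layout can be sketched with Vogel's sunflower spiral (golden-angle increments, radius growing with the square root of the index), which spreads points evenly over a disc. The disc radius of 3 is a hypothetical choice, as the text does not state the covered range. Read literally, the minimum over the k = 3 nearest distances equals the nearest-neighbor distance; the `reduce` argument allows switching to the mean, a common variant of the k-NN heuristic. Function names are hypothetical.

```python
import numpy as np

def sunflower_centers(n=64, radius=3.0):
    """Vogel's sunflower spiral: n points spread evenly over a disc in the complex plane."""
    golden_angle = np.pi * (3.0 - np.sqrt(5.0))        # about 137.5 degrees
    j = np.arange(n)
    r = radius * np.sqrt((j + 0.5) / n)                # sqrt spacing gives uniform area density
    return r * np.exp(1j * golden_angle * j)

def knn_sigmas(centers, k=3, reduce=np.min):
    """Width of each Gaussian from the distances to its k nearest neighboring centers."""
    d = np.abs(centers[:, None] - centers[None, :])     # pairwise distances
    np.fill_diagonal(d, np.inf)                         # ignore each center's distance to itself
    nearest = np.sort(d, axis=1)[:, :k]                 # k smallest distances per center
    return reduce(nearest, axis=1)

mu = sunflower_centers(64)
sigma = knn_sigmas(mu, k=3)
```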

Initialization

The VN is typically “warm-started” from an initial guess q0 that closely approximates the ground truth based on some inverse transformation of the measurements [81]. This study uses a right-inverse initializer regularized by the TSVD in equation (13). However, following the idea of Schwab et al. [98], the TSVD solution is extended in a data-driven manner by replacing the truncated singular values with non-zero components learned by a neural network, which are subsequently projected onto the singular vectors that correspond to these truncated singular values, as in equation (31).

Numerical studies [98] demonstrate the superiority of this data-driven continued TSVD q0 over the classical TSVD solution and justify its use as an initializer for the VN, providing the best possible physics-guided starting point. Originally, the data-driven substitute is derived from the TSVD approximation, which is likely suboptimal, as the TSVD approximation lacks information about the weakly propagating components one aims to compensate for. In this study, a substitute based on the raw measurements p is used instead, as in equation (31); the measurements still hold information about the evanescent field and are therefore considered a more faithful choice. Moreover, the measurements are typically lower-dimensional than the equivalent sources (M<N), which makes the latter approach more efficient by reducing the number of required parameters and computations in the network. In this study, this network is a simple 3-layer CNN, where each layer uses a set of filter kernels u of uniform size du=5 and RBF activations with Nw=64 Gaussians.
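The structure of the data-driven continued TSVD can be sketched with a dense, reduced SVD. The sketch keeps the r largest singular values for the classical TSVD part and adds externally supplied coefficients along the right singular vectors of the truncated singular values. In the VN these coefficients come from the CNN acting on p; here they are a hypothetical input `learned_coeffs`, the truncation index r is passed in rather than computed by criterion (25), and the exact scaling in equation (31) may differ. Since the reduced SVD is used, the continuation covers only the M − r truncated directions, not the null space of G.

```python
import numpy as np

def continued_tsvd(G, p, r, learned_coeffs=None):
    """TSVD from the r largest singular values, optionally continued into the truncated subspace.

    learned_coeffs is a hypothetical stand-in for the output of the data-driven network:
    one coefficient per truncated right singular vector of the reduced SVD.
    """
    U, s, Vh = np.linalg.svd(G, full_matrices=False)  # G = U diag(s) Vh, s in descending order
    V = Vh.conj().T
    q0 = V[:, :r] @ ((U[:, :r].conj().T @ p) / s[:r])  # classical TSVD solution
    if learned_coeffs is not None:
        q0 = q0 + V[:, r:] @ learned_coeffs             # add components along truncated directions
    return q0
```

Because the added components lie in the span of the truncated singular vectors, they leave the retained TSVD part untouched and only fill in what the truncation discarded.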

The initial filter and activation weights and biases of the VN are randomly sampled from the complex-valued Kaiming uniform distribution.
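The text does not spell out how the Kaiming-uniform rule is extended to complex values. The sketch below shows one possible convention, assumed here. The real and imaginary parts are drawn independently from $U(-b, b)$, and $b$ is chosen so that $E|w|^2 = \text{gain}^2/\text{fan\_in}$. The gain of $\sqrt{2}$ and the layer shape (16 input channels, 32 output channels, $5\times5$ kernels) are hypothetical choices.

```python
import numpy as np

def complex_kaiming_uniform(shape, fan_in, gain=np.sqrt(2.0), rng=None):
    """Complex-valued Kaiming-uniform initialiser (assumed convention).

    Real and imaginary parts ~ U(-b, b) independently. Since
    Var(U(-b, b)) = b^2 / 3, the total variance is 2 b^2 / 3, and setting it
    to gain^2 / fan_in gives b = gain * sqrt(3 / (2 * fan_in)).
    """
    rng = np.random.default_rng() if rng is None else rng
    bound = gain * np.sqrt(3.0 / (2.0 * fan_in))
    real = rng.uniform(-bound, bound, size=shape)
    imag = rng.uniform(-bound, bound, size=shape)
    return real + 1j * imag

# Hypothetical conv layer: 32 output channels, 16 input channels, 5x5 kernels.
w = complex_kaiming_uniform((32, 16, 5, 5), fan_in=16 * 5 * 5,
                            rng=np.random.default_rng(0))
```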

Training dataset and augmentation strategies

The network is trained on a randomized supervised training set of matched source-field pairs. These pairs are sampled from the joint distribution of reasonable combinations under the given model. The network is fed with random batches, i.e., portions of the training set, of $N_\mathcal{B}$ measurement samples and the related sound field models. From these it infers source estimates, which are finally compared to the associated ground-truth sources by a loss function $\mathcal{L}(\cdot)$ that provides the reconstruction error to be minimized.

The training data is generated on the fly. It comprises extended and compact source patterns that are fully randomized. Extended vibrational patterns are exclusively created by infinite plates with random material properties that are either resonating or excited by various loads (e.g. point, circle, ring, square) and forces (e.g. uniform, alternating) acting at different locations and frequencies. The linear combination of these simple sources at different amplitudes and phases results in more complex patterns of wave interference. Parametric 2D window functions (e.g. Kaiser, Tukey) with randomized shape parameters simulate different boundary conditions to mimic finite plates. Additionally, compact sources are represented by randomly distributed monopoles. Note that piecewise-constant source strength distributions, such as pistons, are not considered as part of the training data, which keeps the training set small. In Section 6.2, this restriction turns out to be uncritical due to good generalization.
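The two families of training sources can be sketched as follows. The plate responses used by the authors are replaced here by a deliberately simpler, hypothetical stand-in: a random superposition of sinusoidal standing-wave modes with random complex amplitudes. These modes are tapered by a 2D Kaiser window with a random shape parameter to mimic finite boundaries. Compact sources are modelled as a few randomly placed monopoles. Mode orders, amplitude ranges, and the maximum number of monopoles are illustrative assumptions.

```python
import numpy as np

def random_extended_pattern(n, rng, n_modes=3):
    """Hypothetical stand-in for plate-based extended patterns: random
    standing-wave modes with random amplitude and phase, tapered by a 2D
    Kaiser window with random shape parameter (mimics finite plates)."""
    x = np.linspace(0.0, 1.0, n)
    X, Y = np.meshgrid(x, x, indexing="ij")
    q = np.zeros((n, n), dtype=complex)
    for _ in range(n_modes):
        kx, ky = rng.integers(1, 8, size=2)           # random mode orders
        amp = rng.uniform(0.1, 1.0) * np.exp(1j * rng.uniform(0, 2 * np.pi))
        q += amp * np.sin(kx * np.pi * X) * np.sin(ky * np.pi * Y)
    beta = rng.uniform(0.0, 8.0)                      # random window shape
    taper = np.kaiser(n, beta)
    return q * np.outer(taper, taper)

def random_monopoles(n, rng, max_sources=4):
    """Compact sources: a few randomly placed monopoles with random
    complex strengths on an otherwise zero grid."""
    q = np.zeros(n * n, dtype=complex)
    n_src = rng.integers(1, max_sources + 1)
    idx = rng.choice(n * n, size=n_src, replace=False)
    q[idx] = rng.uniform(0.1, 1.0, n_src) * np.exp(1j * rng.uniform(0, 2 * np.pi, n_src))
    return q.reshape(n, n)

rng = np.random.default_rng(1)
n = int(rng.integers(21, 42))        # 21 <= N_x <= 41, square grid assumed
q_ext = random_extended_pattern(n, rng)
q_mono = random_monopoles(n, rng)
```

Because every pattern is drawn fresh, no fixed dataset has to be stored. This is consistent with generating training data on the fly.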

The sound field associated with each source is obtained by applying the forward model $\mathbf{G}$. Networks trained on a specific model setup do not generalize well to different forward models. This is particularly true for changes in the measurement distance $r$, which affects both the exponent and the denominator of the Green's functions in equation (2) that make up the matrix $\mathbf{G}$ of equation (3). Training the network on varying forward models $\mathbf{G}_i$, including variations in distance $r_i$ and frequency $f_i$, provides generalized information about the physics of wave propagation, which in turn improves regularization.

The training data intentionally covers the whole frequency range of interest, $20\,\text{Hz} \le f_i \le 5\,\text{kHz}$, which exceeds the spatial sampling limit of the equispaced array geometry. The radiated sound field is evaluated at random distances $1 \le z_i \le 10$ cm within the nearfield, so that the measurements contain different amounts of evanescent waves. Furthermore, complex-valued AWGN with $\text{SNR}_i$ varying between $25 \le \text{SNR}_i \le 50$ dB is added to the measurements during training. This enforces implicit denoising for more robust retrieval. A varying number of microphones $6 \le (M_x, M_y) \le 12$ and equivalent sources $21 \le (N_x, N_y) \le 41$ in equation (3) further accounts for different levels of ill-conditioning during training.

The introduced randomness acts as data augmentation. It exposes the network to a larger variety of source-field pairs without actually enlarging the training set of sound sources, thereby preventing the network from overfitting.
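The sketch below shows one randomized forward-model draw under the ranges stated above. It builds a matrix $\mathbf{G}_i$ between a microphone grid at height $z_i$ and an equivalent-source grid at $z=0$, then adds complex AWGN at a random SNR. It assumes the free-field monopole Green's function $e^{-jkR}/(4\pi R)$ and a speed of sound of 343 m/s. It also assumes square grids ($M_x=M_y$, $N_x=N_y$) and a hypothetical 0.3 m aperture. The exact form of equation (2) and the array dimensions used by the authors may differ.

```python
import numpy as np

def plane_grid(n, aperture, z):
    """Regular n x n grid on a plane at height z (metres), shape (n*n, 3)."""
    x = np.linspace(-aperture / 2, aperture / 2, n)
    X, Y = np.meshgrid(x, x, indexing="ij")
    return np.stack([X.ravel(), Y.ravel(), np.full(n * n, z)], axis=1)

def forward_model(mics, sources, f, c=343.0):
    """G[m, n] = exp(-1j k R_mn) / (4 pi R_mn), assumed free-field Green's function."""
    k = 2 * np.pi * f / c
    R = np.linalg.norm(mics[:, None, :] - sources[None, :, :], axis=-1)
    return np.exp(-1j * k * R) / (4 * np.pi * R)

def add_awgn(p, snr_db, rng):
    """Add circularly-symmetric complex white Gaussian noise at a given SNR."""
    noise_power = np.mean(np.abs(p) ** 2) / 10 ** (snr_db / 10)
    noise = np.sqrt(noise_power / 2) * (rng.standard_normal(p.shape)
                                        + 1j * rng.standard_normal(p.shape))
    return p + noise

rng = np.random.default_rng(2)
f = rng.uniform(20.0, 5000.0)     # 20 Hz <= f_i <= 5 kHz
z = rng.uniform(0.01, 0.10)       # 1 cm <= z_i <= 10 cm
Mx = int(rng.integers(6, 13))     # 6 <= M_x <= 12
Nx = int(rng.integers(21, 42))    # 21 <= N_x <= 41
aperture = 0.3                    # hypothetical aperture in metres
G = forward_model(plane_grid(Mx, aperture, z), plane_grid(Nx, aperture, 0.0), f)
q = rng.standard_normal(Nx * Nx) + 1j * rng.standard_normal(Nx * Nx)
p_noisy = add_awgn(G @ q, rng.uniform(25.0, 50.0), rng)
```

Since $M_x^2 < N_x^2$ for every draw in these ranges, each sampled $\mathbf{G}_i$ is underdetermined. Its conditioning varies with $z_i$, $f_i$, and the grid sizes.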

Note that, even though the network is trained on different sampling densities and measurement distances, equivalent sources and microphones must be kept aligned on parallel planes following regular sampling patterns. Equivalent sources can theoretically adapt to irregular microphone array geometries and to sound sources of arbitrary shapes and orientations (see PNN in Sect. 4.2). However, it is impractical to learn their optimal setup from supervised data. While some guidelines exist, there is no universal rule for optimizing the number and position of equivalent sources, which makes it difficult to decide on their specific setup for the target reference in training. For this reason, the training data does not cover volumetric structures and is limited to planar radiators discretized on uniform grids of sufficient resolution. Moreover, the inherent structure of the network and training practically limits its use to planar measurement and source geometries. The network relies heavily on 2D convolutions, which expect their inputs to follow a regular sampling pattern on a planar surface, where each sampling point has a fixed spatial relationship to its neighbors.

Loss and optimizer

Training of deeper networks benefits from layer-wise pre-training. This fits the output of each individual layer $t$, for $0 \le t \le T$, to the ground truth prior to joint training of all layers. The first few layers are then expected to already be close to the optimum, providing a good initialization for subsequent fine-tuning [66].

A more integrated approach to pre-training linearly combines the layer-wise loss functions. It gradually reduces their contribution to the total loss over time according to a regularizing schedule, eventually leaving only the loss of the final output layer $t=T$. Minimizing the exponentially-weighted loss function of equation (32) [76, 77] has proven effective. Here, the schedule weight $\kappa_0 \cdot i \ge 0$, which controls the layer penalization, increases with the training iteration $i$. The initial schedule weight is set to $\kappa_0 = 10^{-3}$.

This layer-wise loss yields faster and more stable convergence by reducing the gradient variance across layers, and it significantly reduces the risk of getting trapped in poor local optima. However, it does not increase model complexity and therefore does not by itself improve reconstruction accuracy, as shown by ablation studies [76].
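Equation (32) is not reproduced here. The following sketch therefore assumes one form that matches the description: layer $t$ is weighted by $\exp(-\kappa_0 \, i \, (T-t))$, and the weights are normalized to sum to one. At $i=0$ all layers contribute equally. As the iteration $i$ grows, the weight concentrates on the final layer $t=T$. The exact weighting and normalization in [76, 77] may differ.

```python
import numpy as np

def layer_weights(T, i, kappa0=1e-3):
    """Assumed exponential schedule: layer t in 0..T gets weight
    exp(-kappa0 * i * (T - t)), normalised to sum to one."""
    t = np.arange(T + 1)
    w = np.exp(-kappa0 * i * (T - t))
    return w / w.sum()

def layerwise_loss(layer_losses, i, kappa0=1e-3):
    """Exponentially weighted sum of the per-layer losses L(q_t, q_true)."""
    layer_losses = np.asarray(layer_losses, dtype=float)
    return float(layer_weights(len(layer_losses) - 1, i, kappa0) @ layer_losses)

# Weight distribution over T + 1 = 5 layers at a few training iterations.
for i in (0, 1_000, 100_000):
    print(i, np.round(layer_weights(T=4, i=i), 3))
```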

For the loss $\mathcal{L}(\cdot)$ in equation (32), essentially a combined $\ell_1$-norm is used. It sums the mismatches in magnitude, real part, and imaginary part between the true and estimated source distributions.
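A minimal sketch of such a combined $\ell_1$ loss follows, assuming equal weights for the three terms and mean absolute errors. The weighting used by the authors is not stated here.

```python
import numpy as np

def combined_l1(q_est, q_true):
    """Mean absolute errors of magnitude, real part and imaginary part,
    summed with equal (assumed) weights."""
    d_mag = np.abs(np.abs(q_est) - np.abs(q_true))
    d_re = np.abs(q_est.real - q_true.real)
    d_im = np.abs(q_est.imag - q_true.imag)
    return float(np.mean(d_mag) + np.mean(d_re) + np.mean(d_im))

q_true = np.array([1 + 1j, 0j])
q_est = np.array([1 - 1j, 0j])      # correct magnitude, wrong phase
print(combined_l1(q_est, q_true))   # 1.0
```

The example shows why the magnitude term alone is insufficient. A pure phase error leaves the magnitudes unchanged, and only the real and imaginary terms register it.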

One additional aspect needs to be considered. In pursuit of minimizing this loss for sparse sources, the VN tends to drive all equivalent sources to zero early in training, instead of concentrating the source strength at the origin of the sound. CNNs are known to be biased towards smooth solutions before learning fine-grained details and sparse patterns, so they yield large reconstruction errors for point sources in the early stages of training. Simply zeroing the output appears to be an easier task that minimizes the loss faster than concentrating the spread energy in only a few active coefficients. Once the output is zeroed, point sources cannot be recovered later in training.

As a remedy, the Normalized Cross Correlation (NCC) $\epsilon_\text{NCC}$ is used as an auxiliary loss. It captures the similarity between estimate and ground truth while compensating for local variations induced by scaling and bias. By preserving structural similarity, it prevents the suppression of all source activity. Since $\epsilon_\text{NCC}=1$ indicates a perfect match, the resulting loss combines the $\ell_1$ term with $1-\epsilon_\text{NCC}$.

This combined loss is used for the layer-wise loss in equation (32), which is minimized over 100 k iterations in batches of $N_\mathcal{B}=8$ samples using the Nesterov-accelerated Adaptive Moment Estimation (NAdam) optimizer. A scheduler reduces the initial learning rate $\zeta=10^{-3}$ whenever training plateaus, and gradient clipping is used to stabilize convergence. Once the network is trained, the learned parameters are fixed. New, unseen measurements of similar nature can then be fed to the network, which serves as the reconstruction operator for data-driven source retrieval.
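The NCC auxiliary term can be sketched as follows. For complex source distributions, the sketch computes the NCC on the source magnitudes. This is an assumption, since the text does not specify how complex values are handled. The sketch also shows the failure mode described above: an all-zero estimate has NCC 0 and thus receives the maximal penalty of 1, no matter how small its $\ell_1$ error is.

```python
import numpy as np

def ncc(q_est, q_true, eps=1e-12):
    """Normalised cross-correlation of source magnitudes (assumed choice
    for complex data). Insensitive to a rescaling of q_est."""
    a = np.abs(q_est).ravel()
    b = np.abs(q_true).ravel()
    a = a - a.mean()
    b = b - b.mean()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b) + eps))

def ncc_loss(q_est, q_true):
    """Auxiliary term that vanishes for a perfect match (NCC = 1)."""
    return 1.0 - ncc(q_est, q_true)

q_true = np.array([0, 1, 0, 0.5j, 0], dtype=complex)   # sparse "point sources"
print(ncc_loss(3.0 * q_true, q_true))   # near 0: scaled copy keeps the structure
print(ncc_loss(np.zeros(5), q_true))    # 1.0: zeroed output is penalised
```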

5.3 Setup of PNN

Unlike the VN, which relies on supervised training with labeled datasets, the PNN follows the paradigm of unsupervised "one-shot" learning by directly fitting the model to a single set of array measurements. Such unsupervised methods [99–107] can be more efficient and become appealing when data is scarce, which is common for large-scale microphone array systems. However, they are typically more prone to overfitting to measurement noise. Different strategies to mitigate overfitting have been proposed, including early stopping [100], underparametrized network architectures [99, 107], and additional denoising regularizers [103, 104]. The PNN relies on weight regularization, using a sparsifying model complexity loss [54], combined with early stopping [72]. In simulation experiments, where the ground-truth source is available, training is stopped as soon as the $\epsilon_\text{RMSE}$ between the estimated and true source distributions starts to increase monotonically, which indicates overfitting.
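The early-stopping rule can be sketched as a check on the sequence of per-iteration $\epsilon_\text{RMSE}$ values. To decide when the error has started to rise monotonically, the sketch waits for a hypothetical `patience` of consecutive increases. It then returns the best iterate seen so far. The patience value and the function names are assumptions for illustration.

```python
import numpy as np

def rmse(q_est, q_true):
    """Root-mean-square error between complex source distributions."""
    return float(np.sqrt(np.mean(np.abs(q_est - q_true) ** 2)))

def early_stop_index(errors, patience=3):
    """Index of the best iterate once the error has increased for
    `patience` consecutive steps (hypothetical choice), or the index of
    the overall minimum if that never happens."""
    run = 0
    for k in range(1, len(errors)):
        run = run + 1 if errors[k] > errors[k - 1] else 0
        if run >= patience:
            return int(np.argmin(errors[: k + 1]))
    return int(np.argmin(errors))

errors = [1.0, 0.8, 0.6, 0.5, 0.55, 0.6, 0.7, 0.4]
print(early_stop_index(errors))   # 3: stops at the sustained rise, never sees 0.4
```

The example also shows the price of the rule: a later, lower error is never reached once training is stopped. This is the intended behavior when a rising error signals overfitting to noise.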

During training, the model parameters $[\mathbf{w}, \mathbf{B}] = [w_0, \ldots, w_N, \mathbf{b}_0, \ldots, \mathbf{b}_N] \in \mathbb{C}^{4N}$ are iteratively updated by backpropagation. These parameters encode the strengths $w_n = q_n$ and locations $\mathbf{b}_n = \mathbf{r}_{0,n} = [x_{0,n}, y_{0,n}, z_{0,n}]^\mathsf{T}$ of the equivalent sources. The updates minimize a regularized loss function with two parts. The first is the least-squares mismatch between the measurements and the model prediction. The second is a spatial sparsity constraint $\psi\|\mathbf{w}\|_1$, which serves as the model complexity loss: it controls the number of active equivalent sources to avoid overfitting to the noisy measurements $\mathbf{p}$.
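A minimal sketch of evaluating such a PNN loss for given strengths $\mathbf{w}$ and locations $\mathbf{B}$ follows. It assumes a squared $\ell_2$ mismatch without scaling factors and the free-field Green's function $e^{-jkR}/(4\pi R)$. The array geometry and the frequency of 1 kHz are hypothetical. In the PNN, the gradients of this loss with respect to both $\mathbf{w}$ and $\mathbf{B}$ are obtained by backpropagation, which the sketch does not show.

```python
import numpy as np

def green_matrix(mics, B, k):
    """G(B)[m, n] = exp(-1j k R) / (4 pi R) between microphones and the
    equivalent-source locations B (assumed free-field Green's function)."""
    R = np.linalg.norm(mics[:, None, :] - B[None, :, :], axis=-1)
    return np.exp(-1j * k * R) / (4 * np.pi * R)

def pnn_loss(w, B, mics, p, k, psi):
    """Squared least-squares mismatch plus l1 sparsity on the strengths."""
    residual = p - green_matrix(mics, B, k) @ w
    return float(np.sum(np.abs(residual) ** 2) + psi * np.sum(np.abs(w)))

def plane(n, z, half_width=0.1):
    """Regular n x n grid on a plane at height z (metres)."""
    x = np.linspace(-half_width, half_width, n)
    X, Y = np.meshgrid(x, x, indexing="ij")
    return np.stack([X.ravel(), Y.ravel(), np.full(X.size, z)], axis=1)

mics = plane(8, 0.05)        # hypothetical 8 x 8 array, 5 cm above the source
B = plane(12, -1e-3)         # equivalent sources retreated by 1 mm
k = 2 * np.pi * 1000.0 / 343.0
w_true = np.zeros(B.shape[0], dtype=complex)
w_true[[10, 77]] = [1.0, 0.5j]
p = green_matrix(mics, B, k) @ w_true
print(pnn_loss(w_true, B, mics, p, k, psi=0.01))
```

For noise-free data, the true strengths leave only the sparsity term. Raising $\psi$ therefore trades data fit against the number of active sources.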

In light of the ESM, the minimization problem in equation (35) fits into the variational framework of equation (7) under CS constraints, as described in Section 3.2. There are two main differences. First, the locations $\mathbf{B}$ of the equivalent sources are trainable, which acts as a kind of regularization. Second, the model parameters are updated by backpropagation instead of classical gradient descent.

Initialization

The authors of [54] suggest determining the initial weights $\mathbf{w}_0$ and biases $\mathbf{B}_0$ of the PNN, which represent the strengths and the distribution of the equivalent sources, respectively, based on prior knowledge about the underlying physical scenario and model parametrization. This speeds up convergence and avoids getting trapped in local minima. Moreover, equivalent sources must be placed neither on the source surface nor at the measurement points, because the gradients for backpropagation otherwise become infinite.

Following these suggestions, the equivalent sources are placed equidistantly just beneath the source surface. A small retreat distance of $z_{0,n} = -1$ mm prevents singular gradients. The source strengths encoded in the network weights are initialized with the LN solution of equation (12). This allows for a fair comparison between the PNN and the VN, which uses a similar approximate solution, equation (31), as its starting point.
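Equation (12) is not reproduced here. The following sketch therefore assumes the Tikhonov-regularized least-norm form $\mathbf{q} = \mathbf{G}^\mathsf{H}(\mathbf{G}\mathbf{G}^\mathsf{H} + \lambda\mathbf{I})^{-1}\mathbf{p}$. This form only requires solving an $M \times M$ system, which is cheap when $M<N$. The random complex matrix stands in for a Green's-function matrix; in the setup above, it would be built from equivalent sources retreated to $z_{0,n} = -1$ mm.

```python
import numpy as np

def ln_solution(G, p, lam):
    """Regularised least-norm estimate q = G^H (G G^H + lam I)^{-1} p
    (assumed form of the LN initialiser)."""
    M = G.shape[0]
    return G.conj().T @ np.linalg.solve(G @ G.conj().T + lam * np.eye(M), p)

rng = np.random.default_rng(4)
M, N = 8, 20                                   # fewer microphones than sources
G = rng.standard_normal((M, N)) + 1j * rng.standard_normal((M, N))
p = rng.standard_normal(M) + 1j * rng.standard_normal(M)
w_init = ln_solution(G, p, lam=0.1)            # initial PNN weights (sketch)
```

By the push-through identity, this equals the familiar Tikhonov solution $(\mathbf{G}^\mathsf{H}\mathbf{G} + \lambda\mathbf{I})^{-1}\mathbf{G}^\mathsf{H}\mathbf{p}$. The $M \times M$ form is simply the cheaper way to compute it for underdetermined arrays.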

Hyperparameters

For training, the PNN requires a manual choice of gradient step sizes for the weights $\mathbf{w}$ and for each Cartesian coordinate in the biases $\mathbf{b}_n = [x_{0,n}, y_{0,n}, z_{0,n}]^\mathsf{T}$. The exception is $z_{0,n}$, which is intentionally kept fixed at $z_{0,n} = -1$ mm for all $n$. Moreover, one has to choose the weight $\psi$ of the sparsifying model complexity loss, as well as the regularization weight $\lambda$ of the LN initializer, which is determined according to equation (25). These hyperparameters must be carefully tuned for the problem at hand, and their optimal values differ vastly across the individual test cases that follow. By contrast, the trained VN requires no manual parameter tuning and generalizes well to different scenarios without human intervention.

6 Comparative simulation study